Apple has been stepping up its efforts to protect the safety and privacy of its users with recent iOS updates. The company also wants to take further steps to protect children who use its smartphones and, beyond that, to reassure parents that iOS devices are safe for kids. With the upcoming iOS 15.2, Apple is taking a bold step in this direction: iPhones and iPads will be able to detect when a child receives or sends a message containing sexually explicit photos. The goal is to help protect kids from sexual predators.

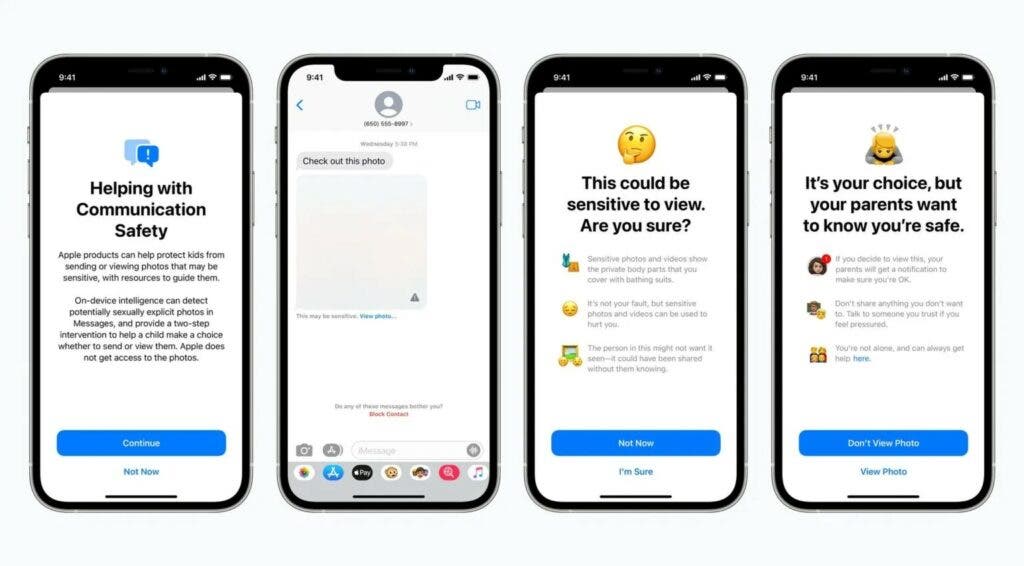

Apple announced back in August that it would update the Messages app on iPhone so that it can detect sexually explicit photos. This Tuesday, the feature went live in iOS 15.2 Developer Beta 2. Once the feature is fully available, a child who receives a nude photo in the Messages app on an iPhone or iPad will see a blurred-out image. If the child tries to view it, they will get an alert asking, “Are you sure?”


The warning message is worded so that a child can understand it: “Sensitive photos and videos show the private body parts that you cover with bathing suits.” If the child views the image anyway, their parents will receive a notification. The same behavior applies when a child tries to send a sexually explicit photo: the child is warned before the photo is sent, and the parents receive an alert if the child chooses to send it anyway.

The feature will be disabled by default, but a parent or guardian will be able to turn it on. The only requirement is that the devices are part of a Family Sharing plan.

Apple will scan photos stored on iPhones and iCloud accounts for child abuse imagery

A report emerged a few days ago saying that Apple is planning to scan photos stored on people’s iPhones and iCloud accounts for imagery suggesting child abuse. The effort could aid law-enforcement investigations, but it could also invite controversial access to user data by government agencies. It is worth noting that the new Messages feature for protecting kids from sexually explicit content does not seem to be part of this scanning effort.

We are particularly curious to see how this situation develops. Either way, as far as protecting children is concerned, it is good to see a major company making an effort to make its software safer.