Waymo, formerly the Google self-driving car project, is an American self-driving technology company headquartered in Mountain View, California. According to two of its recent publications, its AI driver avoided 75% of crashes and reduced serious injuries by 93% in the scenarios studied. The company also claims that both figures are higher than those of an ideal human driver model, which achieved 62.5% and 84%. What is Waymo’s basis for such a precise quantitative description of self-driving safety? In fact, the point of Waymo’s latest papers is not to show off how safe self-driving is. Automatic emergency braking (AEB) has already become standard, and makers of smart cars have been pursuing it for many years.

However, each company uses different standards, and how fast a car can be travelling and what kinds of obstacles AEB can avoid depend on the standard applied. Waymo’s real goal is to develop a set of norms for defining and evaluating whether a self-driving system is safe. Before claiming that self-driving is safer than human driving, a rigorous, scientific evaluation framework is needed; it is not enough to run road tests and tally crashes under different conditions.

What is the benchmark for evaluation: Modeling human reaction times

One of Waymo’s contributions is a new modelling framework that can measure and model driver reaction time in real-world road conditions. Put simply, it compares the reaction time of a self-driving system with the reaction time of human drivers in emergency situations. The framework is not limited to self-driving; it can also be applied to other areas of traffic safety.

Specifically, the model is based on two core ideas:

First, to avoid a collision, a driver typically brakes or steers, and how quickly they do so depends on what they expected the traffic situation to do. When a driver is suddenly confronted with an obstacle they did not anticipate, there is an element of surprise, and this surprise changes the driver’s reaction time.

Second, reaction time depends on the dynamically changing traffic environment, so no single fixed reaction time applies to every scenario. For example, if the car in front of you brakes suddenly, you react quickly; other things being equal, if it decelerates gradually, your reaction time will be correspondingly longer.

Note that reaction time here refers specifically to the mental process of deciding whether to brake or steer. It does not include the evasive action that follows (i.e., actually turning the steering wheel or pressing the brake pedal).
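The article describes these two ideas only qualitatively. As a rough illustration, the sketch below assumes a simple evidence-accumulation view (our assumption, not Waymo’s published model): at each time step the driver compares the lead car’s observed deceleration with what they expected, the unexpected part (the “surprise”) is integrated over time, and a response is triggered once it reaches a threshold. The function `response_time`, the 0.1 s time step, the threshold and the deceleration profiles are all hypothetical.

```python
from typing import Optional, Sequence


def response_time(
    observed_decel: Sequence[float],
    expected_decel: float,
    dt: float = 0.1,
    threshold: float = 2.0,
) -> Optional[float]:
    """Hypothetical surprise-accumulation model of driver response timing.

    Each sample of `observed_decel` is the lead car's deceleration (m/s^2)
    over one step of length `dt` seconds. Only braking beyond what the
    driver expected counts as surprise; it is integrated over time, and the
    driver is assumed to start responding once the integral reaches
    `threshold`. Returns the response time in seconds, or None if the
    threshold is never reached.
    """
    accumulated = 0.0
    for step, decel in enumerate(observed_decel):
        surprise = max(0.0, decel - expected_decel)  # unexpected braking only
        accumulated += surprise * dt
        if accumulated >= threshold:
            return (step + 1) * dt
    return None


# Lead car brakes hard at a constant 8 m/s^2 for 5 s.
sudden = [8.0] * 50
# Lead car's deceleration ramps up by 0.5 m/s^2 per second over 10 s.
gradual = [0.05 * k for k in range(100)]

t_sudden = response_time(sudden, expected_decel=0.0)
t_gradual = response_time(gradual, expected_decel=0.0)
t_expected = response_time(sudden, expected_decel=8.0)  # braking was anticipated
print(t_sudden, t_gradual, t_expected)
```

With these assumed parameters, sudden hard braking triggers a response within a few tenths of a second, gradual deceleration takes close to three seconds, and braking the driver fully anticipated never produces enough surprise to trigger a response at all.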

Driver reaction time

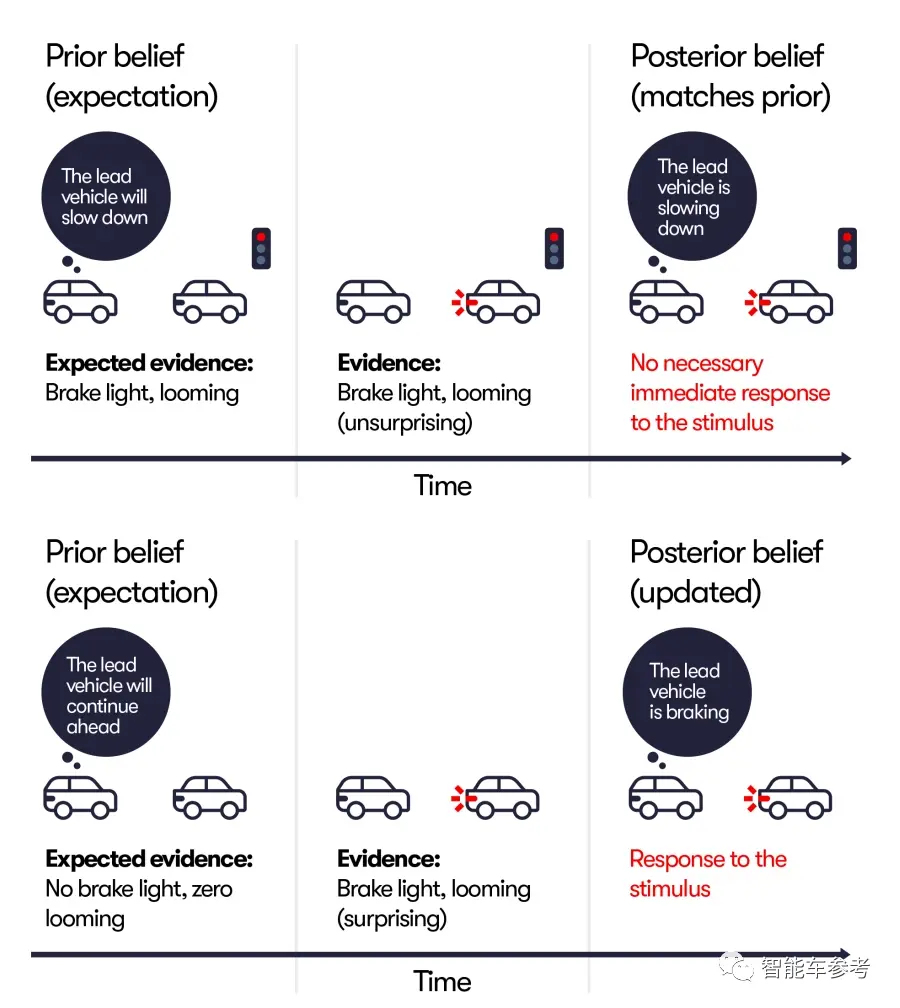

The image above summarizes the process. In the upper part, after seeing the traffic light, the driver expects the car in front to brake and slow down, and that is exactly what it does. The driver’s prediction matches the actual outcome, so there is no “surprise”.

In the lower part, the driver expects the car in front to keep moving, but it suddenly brakes. This contradicts the driver’s expectation, so the driver has to update their understanding of the situation.
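One common way to put a number on this kind of belief update is Bayesian surprise: the Kullback-Leibler divergence between the driver’s belief after an observation and their belief before it. The article does not say that Waymo uses exactly this measure, so treat the sketch below as an assumption. It represents the driver’s belief about the lead car’s deceleration (m/s^2) as a Gaussian, updates it with one noisy observation, and computes the surprise for the two panels of the image above; the function names and all numbers are hypothetical.

```python
import math
from typing import Tuple


def update_belief(prior_mean: float, prior_var: float,
                  obs: float, obs_var: float) -> Tuple[float, float]:
    """Conjugate Gaussian update: combine the prior belief with one observation."""
    post_var = 1.0 / (1.0 / prior_var + 1.0 / obs_var)
    post_mean = post_var * (prior_mean / prior_var + obs / obs_var)
    return post_mean, post_var


def bayesian_surprise(prior_mean: float, prior_var: float,
                      obs: float, obs_var: float) -> float:
    """KL(posterior || prior) between two univariate Gaussians, in nats."""
    post_mean, post_var = update_belief(prior_mean, prior_var, obs, obs_var)
    return (0.5 * math.log(prior_var / post_var)
            + (post_var + (post_mean - prior_mean) ** 2) / (2.0 * prior_var)
            - 0.5)


# Upper panel: the driver expects braking of about 3 m/s^2, and it happens.
expected = bayesian_surprise(prior_mean=3.0, prior_var=1.0, obs=3.0, obs_var=1.0)
# Lower panel: the driver expects no braking, but the lead car brakes at 6 m/s^2.
unexpected = bayesian_surprise(prior_mean=0.0, prior_var=1.0, obs=6.0, obs_var=1.0)
print(expected, unexpected)
```

Even the correctly predicted case gives a small positive value, because the observation still sharpens the driver’s belief; the unexpected braking gives a far larger value, matching the belief update described for the lower panel.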

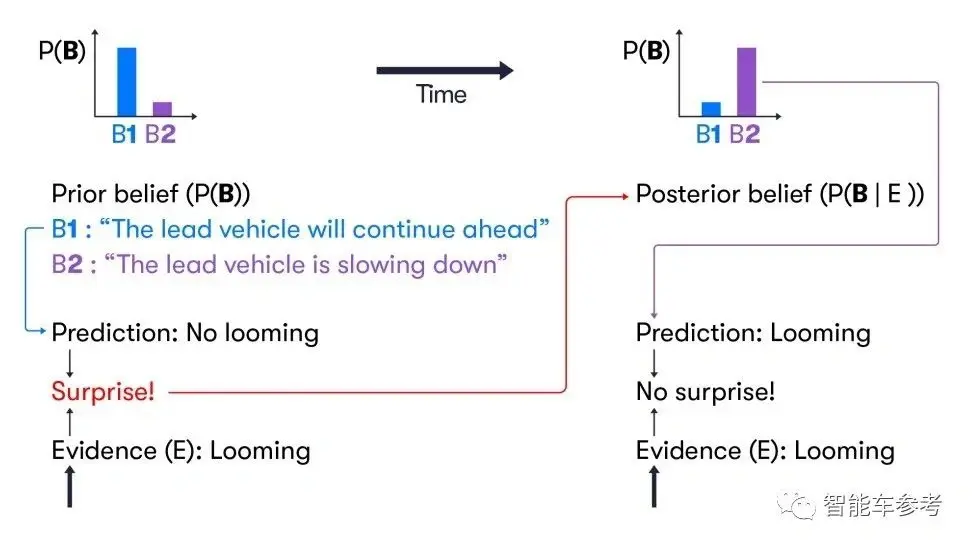

The image above illustrates this process of updating expectations. The framework was developed mainly to address two major limitations of previous reaction time modelling:

- Reaction time is highly dependent on the surrounding context

- It is hard to define clearly what the “stimulus” is

Waymo wants to measure human reaction time, from seeing an obstacle to pressing the brake, on real roads with all their intricate driving conditions. Traditional analyses of reaction time are generally based on specific, controlled experiments, but in common real-world crashes it is often impossible to pin down exactly when the “stimulus” occurred. A more rigorous reaction time benchmark of this kind makes it possible to evaluate the performance of a self-driving system.

Human driver as a reference model

To test its AI driver effectively, Waymo needs a human reference. This is where NIEON comes in. NIEON stands for Non-Impaired, with Eyes ON the conflict. It is a reference model of an ideal human driver: one who is not impaired in any way, stays focused throughout the entire drive, and is never distracted or drowsy.
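To see why the reaction time assigned to a reference driver matters, consider a deliberately simplified kinematic sketch (not the NIEON model as Waymo implements it): a responder travelling at constant speed towards a stationary obstacle keeps going during its reaction time, then brakes at constant deceleration. The function `impact_speed` and every number below are hypothetical.

```python
import math


def impact_speed(speed: float, gap: float, reaction_time: float,
                 decel: float) -> float:
    """Speed (m/s) at which a responder reaches a stationary obstacle.

    Simplified assumption: the responder holds `speed` (m/s) for
    `reaction_time` (s), then brakes at a constant `decel` (m/s^2).
    Returns 0.0 if it stops before covering `gap` (m).
    """
    remaining = gap - speed * reaction_time
    if remaining <= 0:
        return speed  # obstacle reached before braking even starts
    v_squared = speed ** 2 - 2.0 * decel * remaining
    return math.sqrt(v_squared) if v_squared > 0 else 0.0


# Same hypothetical scenario, two reaction times.
attentive = impact_speed(speed=20.0, gap=50.0, reaction_time=1.0, decel=7.0)
distracted = impact_speed(speed=20.0, gap=50.0, reaction_time=2.0, decel=7.0)
print(attentive, distracted)
```

Under these assumed values, one extra second of reaction time turns a stop just short of the obstacle into an impact at roughly 16 m/s, which is why the assumptions built into a reference driver strongly shape any comparison with an automated system.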

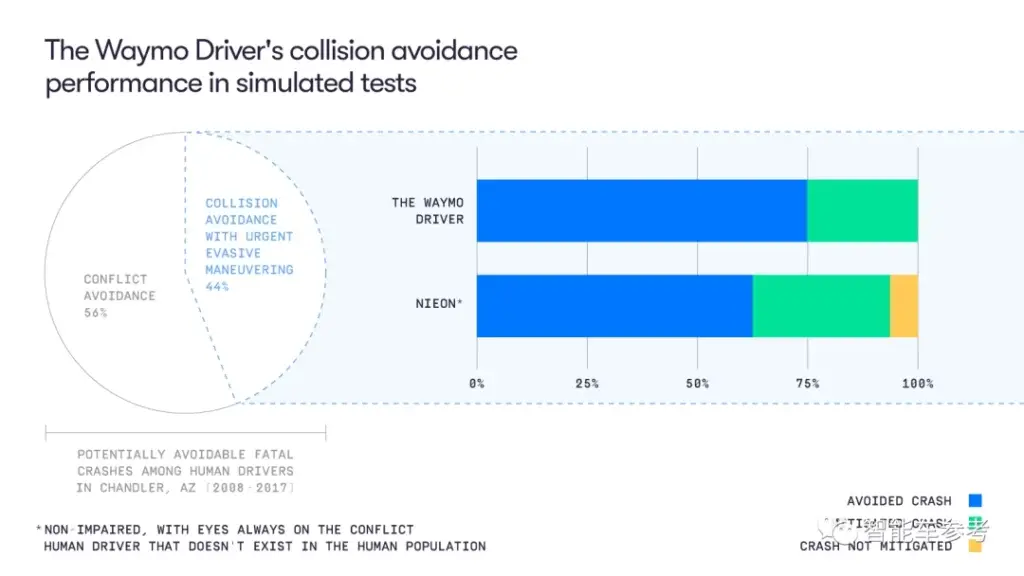

Comparing Waymo’s AI driver with the NIEON model, the results are:

In 16 reconstructed collision scenarios, Waymo’s automated driving system avoided 12 collisions, an avoidance rate of 75%.

In contrast, the ideal NIEON model avoided 10 collisions, an avoidance rate of 62.5%.

Waymo’s self-driving system also reduced serious injuries from collisions by 93%, compared with 84% for the NIEON model.
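The avoidance percentages follow directly from the crash counts. A minimal check (the helper name `rate` is ours, for illustration only):

```python
def rate(avoided: int, total: int) -> float:
    """Fraction of scenarios in which the collision was avoided."""
    return avoided / total


TOTAL = 16                     # reconstructed collision scenarios
ads_rate = rate(12, TOTAL)     # Waymo's automated driving system
nieon_rate = rate(10, TOTAL)   # NIEON reference driver
gap_points = (ads_rate - nieon_rate) * 100
print(f"ADS {ads_rate:.1%}, NIEON {nieon_rate:.1%}, difference {gap_points:.1f} points")
```

The two extra avoided collisions correspond to a 12.5-percentage-point difference. The 93% and 84% injury-reduction figures cannot be derived from the counts given here.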

On this basis, Waymo concluded that its self-driving AI driver is safer than human drivers, and it emphasizes that this conclusion is especially true for older drivers. The paper also says that a behavioural reference model similar to NIEON can serve as a benchmark for judging the quality and safety of an automated driving system (ADS).

Waymo’s study limitations

As for whether the test results are reliable, Waymo officials also list four limitations.

- First, the dataset involves collisions that were primarily caused by humans. It is certainly important to check that a self-driving system can correctly handle these known, human-induced crashes, and to test its ability to avoid similar behaviour itself. However, not all crashes are human-induced.

- Second, the study modelled only reconstructions of police-reported crashes, and the crashes recorded in official documents may differ from what actually happened.

- Third, the study judges the quality of Waymo’s self-driving system against only a single NIEON model.

- Fourth, the performance of the entire self-driving system was tested in a simulated environment under varying conditions, which may not be suitable for some specific scenarios.

What’s holding back self driving?

The popularization of self-driving technology is facing a difficult time. A common question is why self-driving is not yet approved for use. The short answer is that regulations are incomplete and the division of rights and responsibilities for self-driving vehicles is unclear. Why is the division of responsibility unclear at today’s L2-L3 stage? Quite simply, because current self-driving systems are not reliable enough to be “foolproof”: they require a human to be ready to take over. What remains unclear is exactly when a human should take over.

There is no qualitative or quantitative standard defining the circumstances under which a human needs to take over from the system, so rights and responsibilities cannot be clearly divided in law. The incomplete regulations are therefore a consequence of the lag in the self-driving industry as a whole: the industry has never given legislators technical standards that can work at the legal level.

To clear the regulatory obstacles to deploying self-driving, exact and rigorous definitions are needed for system reliability, road complexity, system capability boundaries, conditions for human intervention, and critical points of system failure.