OpenAI recently announced an upgrade to its GPT-4 Turbo preview model. In the same announcement, the company updated several existing models and released new embedding models. The release includes two new text embedding models, an updated GPT-4 Turbo preview, an updated GPT-3.5 Turbo, and an updated text moderation model.

Updated GPT-4 Turbo preview model

Based on developer feedback on the earlier preview, OpenAI released the gpt-4-0125-preview model. It completes tasks such as code generation more thoroughly than the previous preview and is intended to reduce cases of “laziness”, where the model does not finish a task.

The new preview also fixes a bug that affected non-English UTF-8 generations, and OpenAI introduced a “gpt-4-turbo-preview” model alias that automatically points to the latest preview model. OpenAI also plans to make GPT-4 Turbo with vision generally available in the coming months.
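The alias gives clients a choice: track the newest preview automatically, or pin a specific snapshot so behavior does not change unexpectedly. The sketch below only builds a request body in the shape used by the Chat Completions API (a model name plus a list of messages) and makes no network call. The helper build_chat_request and its pin_snapshot flag are hypothetical names for illustration.

```python
# Model names as given in the announcement.
PINNED_SNAPSHOT = "gpt-4-0125-preview"  # fixed snapshot: behavior stays stable
PREVIEW_ALIAS = "gpt-4-turbo-preview"   # alias: follows the latest preview


def build_chat_request(prompt: str, pin_snapshot: bool = True) -> dict:
    """Hypothetical helper: build a Chat Completions-style request body.

    Pinning trades automatic upgrades for reproducibility; the alias picks up
    new previews without code changes, but its behavior can shift over time.
    """
    model = PINNED_SNAPSHOT if pin_snapshot else PREVIEW_ALIAS
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    print(build_chat_request("Write a unit test for a date parser")["model"])
    print(build_chat_request("Write a unit test for a date parser", pin_snapshot=False)["model"])
```

Pinning is usually the safer default for production, while the alias suits experimentation where picking up the latest fixes matters more than stable output.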

New embedding models with lower pricing

OpenAI also introduced two new embedding models: a smaller, highly efficient text-embedding-3-small model and a larger, more powerful text-embedding-3-large model.

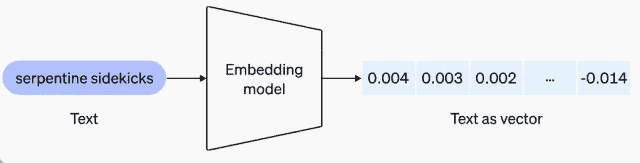

An embedding is a sequence of numbers that represents the concepts within content such as natural language or code. Embeddings make it easier for machine learning models and other algorithms to understand how pieces of content are related and to perform tasks such as clustering or retrieval.
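A common way to measure how related two pieces of content are is the cosine similarity of their embeddings. The sketch below uses made-up 3-dimensional vectors purely for illustration (real text-embedding-3-small vectors have 1,536 dimensions by default); the names docs and most_similar are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


# Toy "embeddings", invented for illustration only.
docs = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.85, 0.15, 0.05],
    "invoice": [0.0, 0.2, 0.95],
}


def most_similar(query: list[float], corpus: dict[str, list[float]]) -> str:
    """Retrieval in its simplest form: return the closest entry to the query."""
    return max(corpus, key=lambda k: cosine_similarity(query, corpus[k]))


query = [0.88, 0.12, 0.02]
print(most_similar(query, docs))
print(round(cosine_similarity(docs["cat"], docs["kitten"]), 3))
print(round(cosine_similarity(docs["cat"], docs["invoice"]), 3))
```

Related concepts ("cat", "kitten") score close to 1, while unrelated ones ("cat", "invoice") score near 0; clustering and retrieval both build on this kind of comparison.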

Embeddings power applications such as knowledge retrieval in ChatGPT and the Assistants API, as well as many retrieval-augmented generation (RAG) developer tools.
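In a RAG pipeline, embeddings are used to find the passages most relevant to a question, which are then added to the prompt sent to a chat model. This is a minimal sketch assuming precomputed embeddings; the knowledge base, its toy 3-dimensional vectors, and the helpers retrieve and build_rag_prompt are all hypothetical, and no model is called.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical knowledge base: passages stored alongside precomputed embeddings.
# In practice the vectors would come from a model such as text-embedding-3-small.
KNOWLEDGE_BASE = [
    ("Refunds are processed within 5 business days.", [0.9, 0.1, 0.1]),
    ("Our office is closed on public holidays.", [0.1, 0.9, 0.1]),
    ("Refund requests need an order number.", [0.8, 0.2, 0.2]),
]


def retrieve(query_vec, k=2):
    """Return the k passages whose embeddings are most similar to the query."""
    ranked = sorted(
        KNOWLEDGE_BASE,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


def build_rag_prompt(question, query_vec, k=2):
    """Augment the question with retrieved context before generation."""
    context = "\n".join(f"- {p}" for p in retrieve(query_vec, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# The query vector stands in for the embedding of the question text.
print(build_rag_prompt("How long do refunds take?", [0.95, 0.05, 0.1]))
```

Only the two refund passages make it into the prompt; the unrelated office-hours passage is filtered out by similarity, which is the "retrieval" half of retrieval-augmented generation.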

Text-embedding-3-small

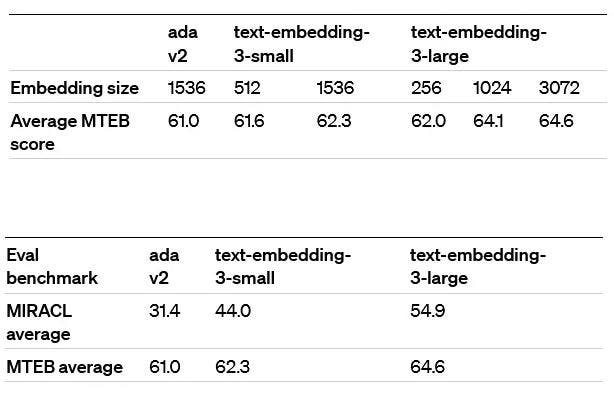

Compared with the text-embedding-ada-002 model released in December 2022, the performance and efficiency of text-embedding-3-small have been greatly improved.

In terms of performance, text-embedding-3-small's average score on MIRACL, a commonly used benchmark for multilingual retrieval, increased from 31.4% to 44.0%. Its average score on MTEB, a commonly used benchmark for English tasks, increased from 61.0% to 62.3%.

In terms of pricing, text-embedding-3-small costs one-fifth as much as text-embedding-ada-002: the price falls from $0.0001 to $0.00002 per 1k tokens.
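To see what the price cut means in practice, the sketch below computes the cost of embedding a corpus with each model using the per-1k-token prices above. The 10-million-token corpus size is a hypothetical figure chosen for illustration.

```python
# Per-1k-token prices quoted in the announcement (USD).
PRICE_PER_1K = {
    "text-embedding-ada-002": 0.0001,
    "text-embedding-3-small": 0.00002,
}


def embedding_cost(model: str, tokens: int) -> float:
    """USD cost to embed `tokens` tokens with `model`."""
    return tokens / 1000 * PRICE_PER_1K[model]


corpus_tokens = 10_000_000  # hypothetical corpus size
old = embedding_cost("text-embedding-ada-002", corpus_tokens)
new = embedding_cost("text-embedding-3-small", corpus_tokens)
print(f"ada-002: ${old:.2f}  3-small: ${new:.2f}  ratio: {old / new:.0f}x")
```

For this corpus the bill drops from about $1.00 to about $0.20, the fivefold reduction described above; the ratio holds for any corpus size because both prices are linear in tokens.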

Text-embedding-3-large

The text-embedding-3-large model is OpenAI's new best-performing embedding model. Compared with text-embedding-ada-002, its average score on MIRACL improves from 31.4% to 54.9%, and its average score on MTEB improves from 61.0% to 64.6%. text-embedding-3-large is priced at $0.00013 per 1k tokens.

Native support for shortening embeddings

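The new models natively support shortening embeddings, which lets developers trade a small amount of accuracy for lower storage and compute requirements. Developers can request a shorter embedding through the dimensions API parameter, and the shortened embedding keeps its concept-representing properties. For example, OpenAI reports that text-embedding-3-large shortened to 256 dimensions still outperforms an unshortened text-embedding-ada-002 embedding with 1,536 dimensions on MTEB. Simply put, shortening is like removing some less important details from a complex label while keeping the gist.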
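If you shorten stored vectors yourself instead of using the dimensions parameter, a minimal approach is to keep the leading values and rescale the result back to unit length, so that cosine and dot-product comparisons stay consistent. This is a sketch under that assumption; shorten_embedding is a hypothetical helper, the input vector is synthetic rather than real model output, and truncation like this is only meaningful for models trained to support it, such as the new text-embedding-3 models.

```python
import math


def shorten_embedding(vec: list[float], dims: int) -> list[float]:
    """Keep the first `dims` values, then rescale to unit length (L2 norm = 1)."""
    if not 0 < dims <= len(vec):
        raise ValueError("dims must be between 1 and len(vec)")
    head = vec[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head]


# Synthetic 3,072-value vector standing in for a text-embedding-3-large output
# (3,072 is that model's default size).
full = [math.sin(i) for i in range(3072)]
short = shorten_embedding(full, 256)

BYTES_PER_FLOAT32 = 4  # assuming vectors are stored as 32-bit floats
print(len(full) * BYTES_PER_FLOAT32, "->", len(short) * BYTES_PER_FLOAT32, "bytes per vector")
```

Going from 3,072 to 256 values cuts storage per vector by a factor of 12, which is the storage side of the accuracy trade-off described above.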

GPT-3.5 Turbo

For API users, GPT-3.5 Turbo remains a strong option: across a wide range of tasks it costs less than GPT-4 and runs faster. OpenAI has now released a new GPT-3.5 Turbo model, gpt-3.5-turbo-0125, at lower prices.

For paying users, the 50% cut in input prices and 25% cut in output prices is a significant benefit. The new input price is $0.0005 per 1k tokens, and the new output price is $0.0015 per 1k tokens.
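The overall saving depends on a workload's mix of input and output tokens. The sketch below derives the previous prices from the stated percentage cuts ($0.001 input and $0.002 output per 1k tokens, implied by the arithmetic rather than quoted in this article) and compares costs for a hypothetical workload of 1,000 requests with 2,000 input and 500 output tokens each; request_cost is a hypothetical helper.

```python
# New GPT-3.5 Turbo prices from the announcement (USD per 1k tokens).
NEW_INPUT, NEW_OUTPUT = 0.0005, 0.0015
# Previous prices implied by the stated cuts: input -50%, output -25%.
OLD_INPUT = NEW_INPUT / (1 - 0.50)    # 0.001
OLD_OUTPUT = NEW_OUTPUT / (1 - 0.25)  # 0.002


def request_cost(input_tokens: int, output_tokens: int,
                 in_price: float, out_price: float) -> float:
    """USD cost of one request, given per-1k-token input and output prices."""
    return input_tokens / 1000 * in_price + output_tokens / 1000 * out_price


# Hypothetical workload: 1,000 requests, 2,000 input + 500 output tokens each.
n, tin, tout = 1000, 2000, 500
before = n * request_cost(tin, tout, OLD_INPUT, OLD_OUTPUT)
after = n * request_cost(tin, tout, NEW_INPUT, NEW_OUTPUT)
print(f"before: ${before:.2f}  after: ${after:.2f}  saved: {1 - after / before:.0%}")
```

This input-heavy workload drops from $3.00 to $1.75, a saving of about 42%; the more a workload leans toward input tokens, the closer its saving gets to the full 50% input discount.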

Final Words

OpenAI’s recent announcement marks significant advances in its AI models and services. The updated GPT-4 Turbo preview model responds to developer feedback by completing tasks such as code generation more thoroughly and fixing a bug that affected non-English generations. The new embedding models, text-embedding-3-small and text-embedding-3-large, improve on text-embedding-ada-002 in both cost efficiency and benchmark performance on MIRACL and MTEB.

Native support for shortening embeddings gives developers flexibility in balancing accuracy against resource requirements. The GPT-3.5 Turbo update, meanwhile, lowers input and output prices, making an already fast and inexpensive model even more affordable for paying users. Overall, these updates underscore OpenAI’s commitment to advancing AI capabilities and accessibility.