NVIDIA has recently introduced a new AI chatbot, Chat with RTX, designed to run locally on Windows PCs equipped with NVIDIA GeForce RTX 30 or 40 Series GPUs. The tool lets users personalize a chatbot with their own content, keeping sensitive data on their devices instead of relying on cloud-based services. Because Chat with RTX runs as a local system, it can be used without an Internet connection. The app supports all GeForce RTX 30 and 40 Series GPUs with at least 8 GB of video memory.

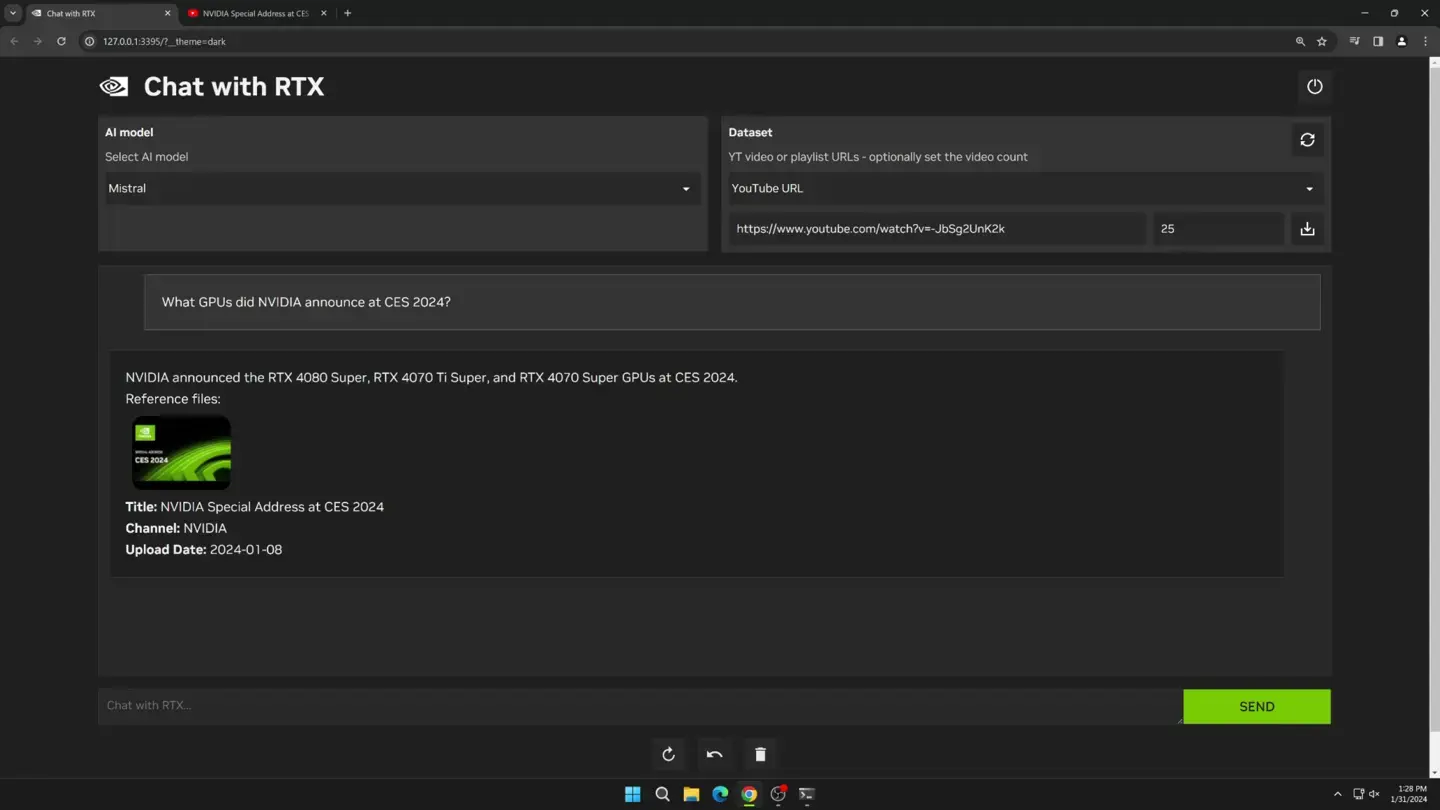

Chat with RTX supports multiple file formats, including .txt, .pdf, .doc/.docx, and .xml. Users point the app to the folder containing their files, and it loads them into its library in seconds. Users can also provide the URL of a YouTube playlist; the app then loads the transcripts of the videos in that playlist, so users can ask questions about the topics the videos cover.
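To make the folder-loading step concrete, here is a minimal Python sketch of how a tool might pick out supported files from a folder before indexing them. It is not Chat with RTX's code: the `collect_documents` function and the `SUPPORTED_EXTENSIONS` set are hypothetical, and the sketch only lists matching files. Actually extracting text from PDFs or Word documents would require additional libraries.

```python
from __future__ import annotations

import tempfile
from pathlib import Path

# Hypothetical extension set mirroring the formats listed above;
# this is an illustration, not NVIDIA's actual list.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def collect_documents(folder: str | Path) -> list[Path]:
    """Recursively list files under `folder` whose extension is supported."""
    root = Path(folder)
    return sorted(
        path
        for path in root.rglob("*")
        # Compare lowercased suffixes so "report.PDF" matches ".pdf".
        if path.is_file() and path.suffix.lower() in SUPPORTED_EXTENSIONS
    )


if __name__ == "__main__":
    # Build a throwaway folder to show which files would be picked up.
    with tempfile.TemporaryDirectory() as demo:
        for name in ["notes.txt", "report.PDF", "photo.png", "sub/spec.docx"]:
            path = Path(demo, name)
            path.parent.mkdir(parents=True, exist_ok=True)
            path.write_text("placeholder")
        print([p.name for p in collect_documents(demo)])
```

Matching on the lowercased suffix means a file such as `report.PDF` is picked up alongside `report.pdf`, while unsupported files such as images are skipped.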

Based on the official description, users can query Chat with RTX much as they would ChatGPT. However, its answers are drawn entirely from the specific dataset the user provides, which makes it better suited to tasks such as summarizing documents and quickly searching through them.

Running TensorRT-LLM on an RTX GPU means users can work with all of their data and projects locally, with no need to store that data in the cloud. This saves time and, because answers are grounded in the user's own files, can produce more relevant results. NVIDIA said that TensorRT-LLM v0.6.0, which will be launched later this month, will deliver up to 5 times faster performance and add support for other LLMs such as Mistral 7B and Nemotron-3 8B.

Key Features of Chat with RTX

- Local Processing: Chat with RTX runs locally on Windows RTX PCs and workstations, providing fast responses and keeping user data private.

- Personalization: Users can customize the chatbot with their own content, including text files, PDFs, DOC/DOCX files, XML files, and YouTube video transcripts.

- Retrieval-Augmented Generation (RAG): The chatbot uses RAG, NVIDIA TensorRT-LLM software, and NVIDIA RTX acceleration to generate contextually relevant answers from the user's content.

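- Open-Source Large Language Models (LLMs): Users can choose between two open-source LLMs, Mistral and Llama 2, to power the chatbot. The models are not retrained on the user's files; relevant passages are retrieved from them when a question is asked.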

- Developer-Friendly: Chat with RTX is built from the TensorRT-LLM RAG developer reference project, which is available on GitHub, so developers can use it to build their own RAG-based applications.
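Retrieval-augmented generation works in two steps: find the passages of the user's content most relevant to a question, then hand those passages to the LLM together with the question. The Python sketch below illustrates that flow under simplifying assumptions. It is not how Chat with RTX or the TensorRT-LLM reference project implements retrieval: RAG pipelines typically use neural embeddings and a vector index, whereas this sketch swaps in plain word-count cosine similarity. The `retrieve` and `build_prompt` helpers are hypothetical, and no model is called; the output is only the prompt an LLM such as Mistral or Llama 2 would receive.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks whose word counts are most similar to the query's."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine(query_vec, Counter(tokenize(chunk))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    """Assemble the prompt a RAG system would send to the LLM (no model is called here)."""
    context = "\n".join(f"- {chunk}" for chunk in retrieve(query, chunks, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
        "Answer:"
    )


if __name__ == "__main__":
    # A tiny stand-in for the user's document library.
    library = [
        "The quarterly report shows revenue grew 12 percent.",
        "Meeting notes: the team will migrate the database in March.",
        "Recipe: mix flour, sugar and eggs, then bake for 30 minutes.",
    ]
    print(build_prompt("When will the database migration happen?", library, k=1))
```

Because the model sees only the passages placed in its prompt, its answers stay tied to the user's own files rather than to whatever it memorized during training.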

Requirements and Limitations

- Hardware Requirements: Chat with RTX requires an NVIDIA GeForce RTX 30 Series GPU or newer with at least 8 GB of VRAM, Windows 10 or 11, and the latest NVIDIA GPU drivers.

- Size: The chatbot is a 35 GB download, and its Python instance uses around 3 GB of RAM.

- Maturity: Chat with RTX is an early developer demo, so it still has limited context memory and sometimes attributes answers to the wrong source.

Applications and Benefits

- Data Research: Chat with RTX can be a valuable tool for data research, especially for journalists or anyone who needs to analyze a collection of documents.

- Privacy and Security: By keeping data and responses restricted to the user’s local environment, there is a significant reduction in the risk of exposing sensitive information externally.

- Education and Learning: Chat with RTX can provide quick tutorials and how-tos based on educational content the user loads, such as YouTube tutorial playlists.

Conclusion

Chat with RTX is an exciting development in AI: a locally run, personalized chatbot that can boost worker productivity while reducing privacy concerns. As an early developer demo, it still has limitations, but it shows the potential of accelerating LLMs with RTX GPUs and hints at what AI chatbots may be able to do locally on PCs in the future. What do you think about this new tool? Let us know your thoughts in the comment section below.