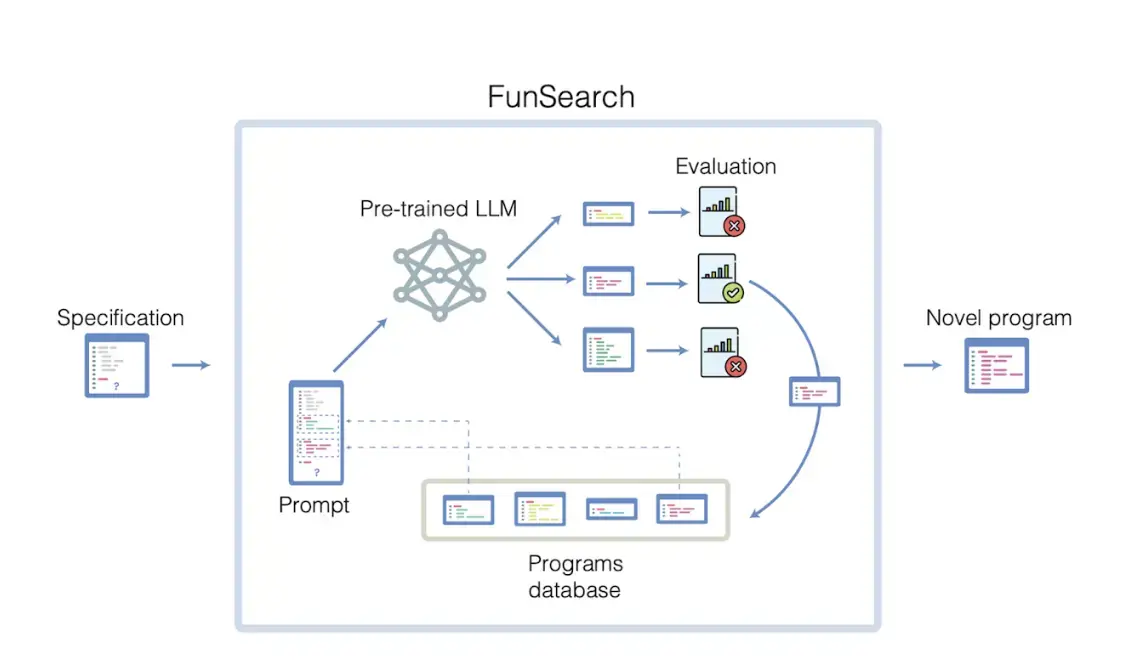

Google DeepMind has introduced a new method called FunSearch, which uses Large Language Models (LLMs) to search for new solutions in mathematics and computer science. The method is described in a paper published in Nature. FunSearch is an evolutionary method that promotes and develops the highest-scoring ideas, which are expressed as computer programs. These programs are run and evaluated automatically. The system selects some programs from the current pool and feeds them to an LLM. The LLM creatively builds upon them and generates new programs, which are automatically evaluated. The best ones are added back to the pool, creating a self-improving loop. FunSearch uses Google’s PaLM 2, but it is compatible with other LLMs trained on code.

According to Google, FunSearch can tackle the “cap set problem” and a range of “complex problems involving mathematics and computer science”. The method's main addition is an “evaluator” that works alongside the AI model. The model outputs a series of “creative problem-solving methods”, and the evaluator is responsible for judging each one. Over multiple iterations, the solutions that survive this process become mathematically stronger.

Google DeepMind used the PaLM 2 model for testing. The researchers set up a dedicated “code pool”, posed a series of problems to the model in the form of code, and added an evaluator process. In each iteration, the system automatically draws programs from the code pool, the model generates “creative new solutions”, and these are submitted to the evaluator. The “best solutions” are added back to the code pool, and another iteration begins.

How FunSearch Works

FunSearch uses an iterative procedure. First, the user writes a description of the problem in the form of code. This description comprises a procedure to evaluate programs and a seed program used to initialize a pool of programs. At each iteration, the system selects some programs from the current pool and feeds them to an LLM. The LLM creatively builds upon these and generates new programs, which are automatically evaluated. The best ones are added back to the pool of existing programs, creating a self-improving loop.
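The loop described above can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions, not DeepMind's implementation: a "program" is reduced to a pair of numbers, `mock_llm` is a hypothetical stand-in for the LLM, and the names `evaluate`, `mock_llm` and `fun_search_loop` are invented for this example.

```python
import random
from typing import Callable, List, Tuple

# Assumption: a "program" is just a pair of numbers here. In the real
# system it would be the source code of a function.
Program = Tuple[float, float]


def evaluate(program: Program) -> float:
    """User-supplied scorer (higher is better). Toy objective for the sketch."""
    a, b = program
    return -((a - 3.0) ** 2 + (b + 1.0) ** 2)


def mock_llm(parents: List[Program], rng: random.Random) -> Program:
    """Hypothetical stand-in for the LLM: blend the parents, then perturb."""
    a = sum(p[0] for p in parents) / len(parents) + rng.gauss(0, 0.5)
    b = sum(p[1] for p in parents) / len(parents) + rng.gauss(0, 0.5)
    return (a, b)


def fun_search_loop(
    seed: Program,
    score_fn: Callable[[Program], float],
    propose: Callable[[List[Program], random.Random], Program],
    iterations: int = 200,
    pool_size: int = 10,
    rng_seed: int = 0,
) -> Tuple[float, Program]:
    rng = random.Random(rng_seed)
    pool = [(score_fn(seed), seed)]  # the pool starts from the seed program
    for _ in range(iterations):
        # Select some programs from the current pool.
        parents = [p for _, p in rng.sample(pool, min(2, len(pool)))]
        # The "LLM" builds on them to produce a new candidate.
        child = propose(parents, rng)
        try:
            score = score_fn(child)  # automatic evaluation
        except Exception:
            continue  # candidates that fail to run are simply discarded
        # Keep only the best candidates for the next iteration.
        pool.append((score, child))
        pool.sort(key=lambda entry: entry[0], reverse=True)
        del pool[pool_size:]
    return pool[0]


best_score, best_program = fun_search_loop((0.0, 0.0), evaluate, mock_llm)
```

Because the pool only ever drops its lowest-scoring entries, the best score can never get worse from one iteration to the next, which is the "self-improving" property the article refers to.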

Discovering New Mathematical Knowledge

Discovering new mathematical knowledge and algorithms in different domains is notoriously difficult and largely beyond the power of the most advanced AI systems. To tackle such challenging problems, FunSearch relies on several key components. Rather than only outputting solutions, FunSearch generates programs that describe how those solutions were arrived at. This show-your-working approach is how scientists generally operate, with discoveries or phenomena explained through the process used to produce them. FunSearch favours finding solutions represented by highly compact programs, that is, solutions with a low Kolmogorov complexity. Short programs can describe very large objects, allowing FunSearch to scale.

Google said FunSearch is particularly good at discrete mathematics (combinatorics), and that it can handle problems in extremal combinatorics. In a press release, the researchers described how the method was applied to the “cap set problem” (a central problem in mathematics involving counting and permutations).

FunSearch and the Bin Packing Problem

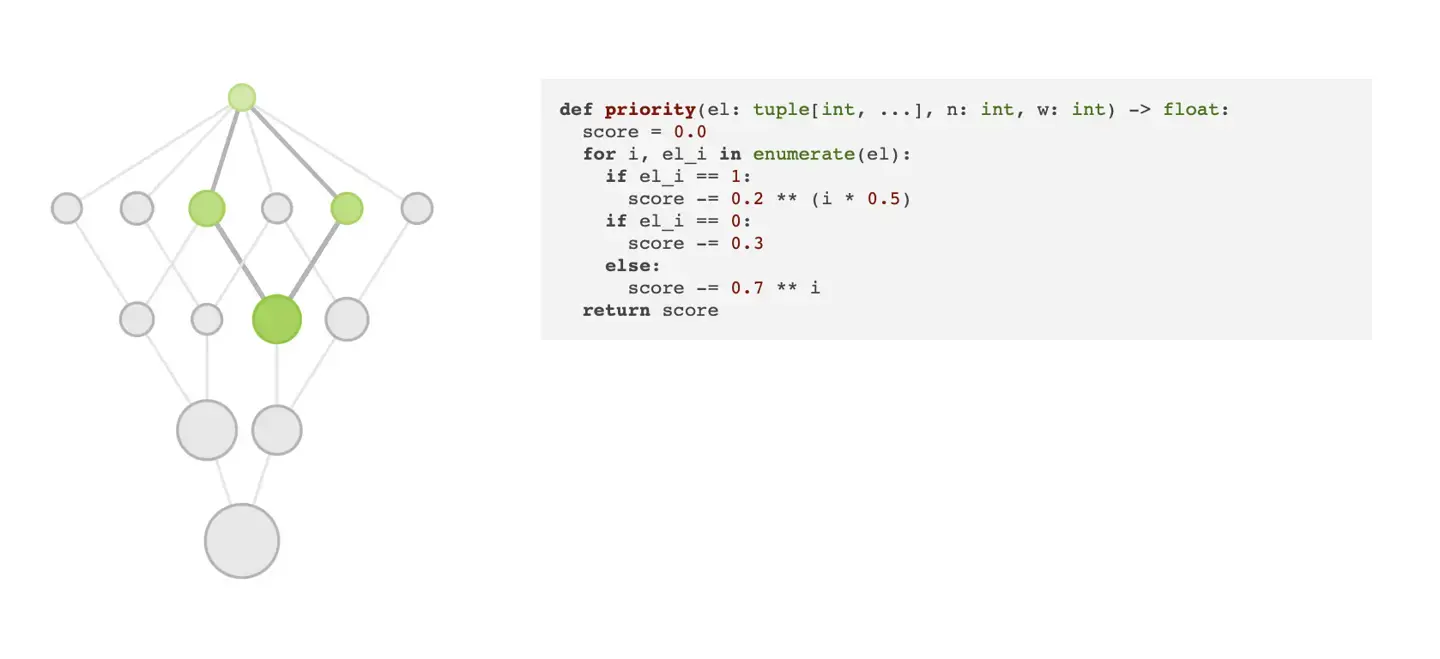

To test its versatility, the researchers used FunSearch to approach another hard problem in math: the bin packing problem, which involves trying to pack items into as few bins as possible. The researchers first sketched the problem as a program, leaving out the lines that would specify how to solve it. That is where FunSearch comes in. It gets Codey, a version of PaLM 2 fine-tuned on code, to fill in the blanks, in effect suggesting code that will solve the problem. A second algorithm then checks and scores what Codey comes up with. The best suggestions, even if not yet correct, are saved and given back to Codey, which tries to complete the program again. “Many will be nonsensical, some will be sensible, and a few will be decent,” says Pushmeet Kohli of Google DeepMind. “You take those truly inspired ones and you say, ‘Okay, take these ones and repeat.’”

The bin packing problem asks how to put items of different sizes into the minimum number of containers. FunSearch provides a solution for it. This is a “just-in-time” solution, deciding where each item goes as it arrives: FunSearch generates a program that “automatically adjusts based on the existing volume of the item.” The researchers noted that, compared with AI methods that rely on neural networks to learn a solution, the code FunSearch outputs is easier to check and deploy. This makes it easier to integrate into real industrial environments.
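To make the idea concrete, here is a minimal sketch of online bin packing in which the placement rule is a small pluggable function, the kind of piece FunSearch is asked to write. The rule shown is the classic best-fit heuristic, not the heuristic FunSearch discovered, and the names `best_fit_priority` and `pack_online` are assumptions made for this example.

```python
from typing import Callable, List, Sequence


def best_fit_priority(item: int, remaining: int) -> float:
    """Classic best-fit rule: prefer the bin that would be left fullest.

    This is a textbook heuristic used as a placeholder; a search method
    like FunSearch would try to evolve a better function with this shape.
    """
    return -(remaining - item)


def pack_online(
    items: Sequence[int],
    capacity: int,
    priority: Callable[[int, int], float] = best_fit_priority,
) -> List[int]:
    """Place items one at a time; return the remaining space of each bin."""
    bins: List[int] = []  # remaining capacity of every open bin
    for item in items:
        if item > capacity:
            raise ValueError(f"item {item} exceeds bin capacity {capacity}")
        # Only bins with enough free space are candidates.
        candidates = [i for i, free in enumerate(bins) if free >= item]
        if candidates:
            # Put the item in the candidate bin with the highest priority.
            best = max(candidates, key=lambda i: priority(item, bins[i]))
            bins[best] -= item
        else:
            # Nothing fits, so open a new bin.
            bins.append(capacity - item)
    return bins


bins_used = len(pack_online([4, 8, 1, 4, 2, 1], capacity=10))
```

Swapping in a different `priority` function changes the packing behaviour without touching the rest of the program, which is part of why code produced this way is straightforward to inspect and deploy.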

FunSearch and Set-Inspired Problems

The AI system, called FunSearch, made progress on Set-inspired problems in combinatorics, a field of mathematics that studies how to count the possible arrangements of sets. FunSearch automatically creates requests for a specially trained LLM, asking it to write short computer programs that can generate solutions to a particular scenario. The system then quickly checks whether those solutions are better than known ones. If not, it provides feedback to the LLM so that it can improve in the next round. “The way we use the LLM is as a creativity engine,” says DeepMind computer scientist Bernardino Romera-Paredes. Not all programs that the LLM generates are useful, and some are so incorrect that they wouldn’t even be able to run, he says.
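The quick check mentioned above can be illustrated with a short sketch. It assumes the Set-inspired problem in question is the cap set problem: find as many points as possible in an n-dimensional grid over {0, 1, 2} such that no three of them lie on a line, which is the same as saying no three distinct points sum to zero modulo 3 in every coordinate. The function names `is_cap_set` and `score` are hypothetical, and this is not the evaluator from the paper.

```python
from itertools import combinations
from typing import Iterable, Tuple

Vector = Tuple[int, ...]  # each coordinate is 0, 1 or 2


def is_cap_set(points: Iterable[Vector]) -> bool:
    """Return True if no three distinct points sum to zero modulo 3."""
    pts = set(points)
    for a, b in combinations(pts, 2):
        # The only point that would complete a line through a and b.
        third = tuple((-(x + y)) % 3 for x, y in zip(a, b))
        if third in pts:
            return False
    return True


def score(points: Iterable[Vector]) -> int:
    """Score a candidate: its size if it is a valid cap set, otherwise 0."""
    pts = set(points)
    return len(pts) if is_cap_set(pts) else 0


square = [(0, 0), (0, 1), (1, 0), (1, 1)]  # a valid cap set in dimension 2
diagonal = [(0, 0), (1, 1), (2, 2)]        # three points on one line
```

A checker like this is cheap to run, so every program the LLM proposes can be scored automatically, and invalid or weaker candidates are filtered out before the next round.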

“What I find really exciting, even more so than the specific results we found, is the prospects it suggests for the future of human-machine interaction in math. Instead of generating a solution, FunSearch generates a program that finds the solution. A solution to a specific problem might give me no insight into how to solve other related problems. But a program that finds the solution…”

Artificial intelligence researchers claim to have made the world’s first scientific discovery using a breakthrough that suggests the technology behind ChatGPT and similar programs can generate.

Conclusion

FunSearch is a new method that uses Large Language Models (LLMs) to search for new solutions in mathematics and computer science. The method is described in a paper in Nature, a leading academic journal. FunSearch is an evolutionary method that promotes and develops the highest-scoring ideas, expressed as computer programs. These programs are run and evaluated automatically. The system selects some programs from the current pool and feeds them to an LLM. FunSearch uses Google’s PaLM 2, but it is compatible with other LLMs trained on code. FunSearch could help improve algorithms used in manufacturing, logistics optimization, and reducing energy consumption.

Author Bio

Efe Udin is a seasoned tech writer with over seven years of experience. He covers a wide range of topics in the tech industry from industry politics to mobile phone performance. From mobile phones to tablets, Efe has also kept a keen eye on the latest advancements and trends. He provides insightful analysis and reviews to inform and educate readers. Efe is very passionate about tech and covers interesting stories as well as offers solutions where possible.