Google’s New AI Actually Looks Closer Instead of Making Stuff Up

Google | Wednesday, 28 January 2026 at 22:02

Google DeepMind is equipping Gemini 3 Flash with a new way of looking at images. Normally, an AI model takes a single quick pass over an image. If a detail is too small or blurry to read, such as a tiny serial number or a distant sign, it simply guesses. Agentic Vision changes that. Instead of guessing, the AI zooms in and examines the detail closely, like a person squinting to make something out.

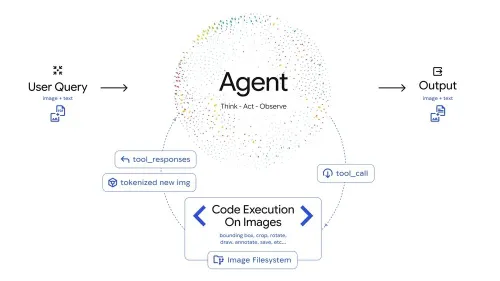

Thinking Before Looking

Instead of being a passive observer, the AI goes through a cycle of three steps. First, it thinks about your question and looks at the entire picture. If it realises it can't see everything clearly, it acts: it writes its own computer code to zoom in, crop, or rotate the image. Finally, it observes the new, clearer view to get the right answer. This "Think-Act-Observe" loop means the AI has far less need to guess. It goes out and finds the evidence it needs.
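To make the loop concrete, here is a toy sketch in Python. It is not Google's implementation. The image is just a grid of numbers, and every name in it (`crop`, `zoom`, `rotate90`, `is_legible`, `think_act_observe`) is a hypothetical stand-in. The `region` argument plays the part of the "think" step, and a simple size check plays the part of the model judging whether it can read the detail.

```python
# Toy sketch of a Think-Act-Observe loop. All names here are hypothetical.
Image = list[list[int]]  # a grayscale image as rows of pixel values

def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Keep only the rectangle that holds the detail of interest."""
    return [row[left:left + width] for row in img[top:top + height]]

def zoom(img: Image, factor: int) -> Image:
    """Enlarge by an integer factor using nearest-neighbour repetition."""
    out: Image = []
    for row in img:
        wide = [pixel for pixel in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out

def rotate90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]

def is_legible(view: Image, min_side: int) -> bool:
    """Stand-in for the model's judgement: is the view big enough to read?"""
    return min(len(view), len(view[0])) >= min_side

def think_act_observe(image: Image, region, min_side: int = 8, max_steps: int = 4):
    # Think: the region tuple stands in for where the model decides to look.
    top, left, height, width = region
    view = crop(image, top, left, height, width)  # Act: isolate the detail
    actions = ["crop"]
    for _ in range(max_steps):
        if is_legible(view, min_side):  # Observe: is the new view clear enough?
            break
        view = zoom(view, 2)  # Act again: magnify and re-check
        actions.append("zoom x2")
    return view, actions

# A 16x16 test image and a small 2x3 region of interest.
image = [[(r * 16 + c) % 256 for c in range(16)] for r in range(16)]
view, actions = think_act_observe(image, region=(4, 4, 2, 3))
print(actions, len(view), len(view[0]))
```

The sketch only shows the control flow. Repeating pixels does not add information, so in a real system the benefit would come from cropping a high-resolution original and giving the model a fresh look at that region alone.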

Solving Hard Problems

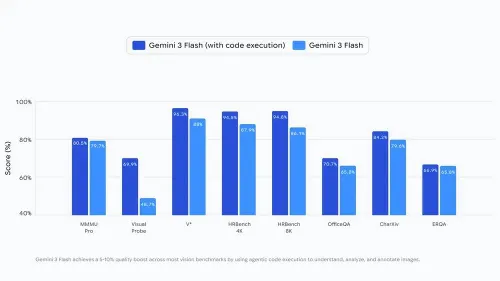

This new approach is already making a difference in practice. In technical tests, the AI's accuracy jumped significantly. For people working with complex blueprints, the AI can now zoom in on tiny architectural details to check them closely. It is also a game-changer for math. If you show it a complicated chart, it doesn't just look at the lines. It writes code to extract the raw data and creates its own precise graph to double-check the facts.
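A rough idea of what that self-written chart code could look like, as a sketch only: the numbers below are made-up stand-ins for values read off a chart, and the helper names `text_bar_chart` and `largest_increase` are hypothetical. The point is that once the data sits in code, a claim such as "the biggest jump was between Q2 and Q3" can be computed instead of eyeballed.

```python
# Hypothetical values, standing in for data extracted from a chart image.
extracted = {"Q1": 10, "Q2": 15, "Q3": 30, "Q4": 20}

def text_bar_chart(data: dict[str, float], width: int = 20) -> str:
    """Redraw the data as a plain-text bar chart scaled to the largest value."""
    peak = max(data.values())  # assumes at least one positive value
    lines = []
    for label, value in data.items():
        bar = "#" * round(value / peak * width)
        lines.append(f"{label:<4}{bar} {value}")
    return "\n".join(lines)

def largest_increase(data: dict[str, float]) -> tuple[str, str]:
    """Return the pair of consecutive labels with the biggest rise."""
    labels = list(data)
    pairs = zip(labels, labels[1:])
    return max(pairs, key=lambda pair: data[pair[1]] - data[pair[0]])

print(text_bar_chart(extracted))
print("Largest increase:", largest_increase(extracted))
```

A plain-text chart keeps the example free of extra libraries. A real run would more likely use a plotting library, but the check itself is the same arithmetic.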

A Natural Way to See

Google DeepMind says this is only the start. Right now, the AI is already getting very good at knowing when to "magnify" a detail. In the future, these models will be even more independent. The AI will be able to complete complicated visual tasks on its own without you having to instruct it to look closely. The result is technology that feels less robotic than it is often made out to be.
