Meta has made real technical progress on VR headsets, but it still faces technical bottlenecks for which it has no solution yet. In his talk at SIGGRAPH, Douglas Lanman, Head of Display Systems Development at Meta, laid them out: ten challenges that must be solved to take the VR experience to the next level. Whoever solves them first should gain the upper hand in the increasingly fierce race to build the hardware gateway to the metaverse.

Meta VR headset bottlenecks

1. Resolution: Meta develops a Butterscotch prototype to improve resolution

To increase immersion and make virtual worlds look sharper and more realistic, VR headsets need higher resolution. Current headsets fall far short of the limits of human vision. Meta says its initial goal is 8K resolution per eye and 60 PPD (pixels per degree, a measure of angular pixel density). The Quest 2, by contrast, offers only about 2K resolution and 20 PPD per eye, which is still a long way from that goal.
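The relationship between pixel count, field of view, and PPD can be sketched with simple arithmetic. This is a rough illustration only: it assumes pixels are spread evenly across the field of view (real lenses are not uniform), takes "2K" to mean roughly 2000 horizontal pixels per eye, and uses the 100-degree horizontal field of view the article cites for typical headsets. The function names are made up for this example.

```python
def pixels_per_degree(horizontal_pixels, horizontal_fov_deg):
    """Average angular resolution, assuming pixels are spread evenly over the view."""
    return horizontal_pixels / horizontal_fov_deg


def pixels_needed(target_ppd, horizontal_fov_deg):
    """Horizontal pixels per eye needed to hit a target PPD at a given field of view."""
    return target_ppd * horizontal_fov_deg


# Assumed inputs: ~2000 horizontal pixels per eye, 100-degree horizontal FoV.
current_ppd = pixels_per_degree(2000, 100)
target_pixels = pixels_needed(60, 100)

print(current_ppd)     # 20.0
print(target_pixels)   # 6000
```

Under these assumptions, tripling the angular resolution from 20 to 60 PPD means tripling the horizontal pixel count at the same field of view, before any widening of the view is considered.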

To push resolution higher, Meta built the Butterscotch prototype, which focuses specifically on resolution. Developing and producing high-resolution displays is not the hard part. The difficulty lies in supplying the computing power needed to drive them. Lanman points to two technologies, foveated rendering and cloud streaming, that may ease the computing load. However, both are themselves very difficult to develop.
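To get a feel for why foveated rendering is attractive, here is a toy estimate of how much pixel work remains when only a small region around the gaze point is rendered at full resolution. This is not Meta's method: the square field of view, the circular full-resolution region, and every parameter value below are assumptions chosen purely for illustration.

```python
import math


def foveated_pixel_fraction(fov_deg, fovea_radius_deg, periphery_scale):
    """Fraction of full-resolution pixel work left after foveation.

    Toy model: a square fov_deg x fov_deg view, a circular region of radius
    fovea_radius_deg rendered at full resolution, and the rest rendered at
    periphery_scale times the linear resolution (so periphery_scale**2 of the pixels).
    """
    total_area = fov_deg ** 2
    fovea_area = min(math.pi * fovea_radius_deg ** 2, total_area)
    fovea_share = fovea_area / total_area
    periphery_share = 1.0 - fovea_share
    return fovea_share + periphery_share * periphery_scale ** 2


# Hypothetical values: 100-degree view, 10-degree foveal radius,
# periphery rendered at one quarter of the linear resolution.
fraction = foveated_pixel_fraction(100, 10, 0.25)
print(f"{fraction:.3f}")   # 0.092
```

In this simplified model the renderer shades roughly a tenth of the pixels it would otherwise need, which hints at the potential savings. It also shows why the approach depends on knowing accurately where the user is looking.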

2. Field of View: Meta VR headsets’ FoV is half that of the human eye

The average human horizontal field of view is 200 degrees. Most current commercial VR headsets, unfortunately, offer a horizontal field of view of only 100 degrees, so the view in a headset is noticeably narrower than what we see in a real environment. The vertical field of view also has room for improvement.

The wider the field of view, the more pixels the headset has to render, which draws more power and generates more waste heat. In addition, as the field of view widens, the image at its edges is easily distorted, so a wider field of view demands better lens technology. The researchers also want to build the lenses and display in a way that doesn’t increase the headset’s form factor.
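The cost of a wider view can be sketched as a pixel count. The example below assumes a uniform pixel density, and the 100-degree vertical field of view is a placeholder, since the article gives no vertical figure. The horizontal values and PPD figures are the ones quoted earlier.

```python
def pixel_count(ppd, hfov_deg, vfov_deg):
    """Pixels per eye for a uniform pixel density over a rectangular field of view."""
    return (ppd * hfov_deg) * (ppd * vfov_deg)


# Assumed: 100-degree vertical FoV in every case (not stated in the article).
base = pixel_count(20, 100, 100)         # today's figures: 20 PPD, 100 degrees
wide = pixel_count(20, 200, 100)         # same density, human-like 200 degrees
wide_sharp = pixel_count(60, 200, 100)   # 60 PPD target over 200 degrees

print(wide / base)         # 2.0
print(wide_sharp / base)   # 18.0
```

Doubling the horizontal field of view doubles the pixel count. Combining it with the 60 PPD target multiplies the count by 18 in this model, because resolution applies along both axes.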

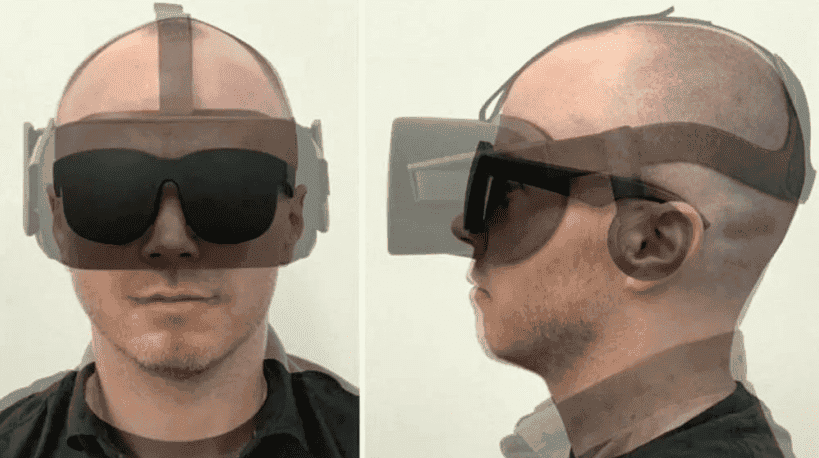

3. Ergonomics: VR headsets are not comfortable enough to wear

Most current VR headsets are somewhat “clunky”. The Quest 2, for example, weighs over 500 grams and protrudes 8 cm from the face. Such a device obviously does not offer a good wearing experience. The ideal VR device should be thinner and lighter, so that users can wear it comfortably for long periods. Pancake lenses and holographic lenses may help reduce the size of the headset, and Meta’s Holocake 2 prototype is exploring this direction. However, the technology is not yet ready for mass production.

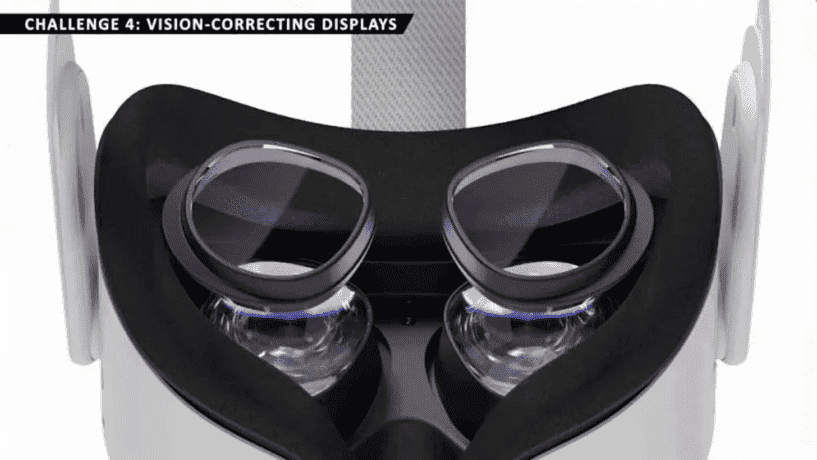

4. Vision correction display: No low-cost vision correction display for now

Better VR headsets should have built-in vision correction so that users do not have to wear regular glasses under the headset. Special lens attachments can solve the problem, but they are not an ideal solution. The best solution is for the headset’s display itself to correct vision, so that headset designers no longer need to consider issues such as fitting glasses. The challenge is finding a solution that is cheap to manufacture and adds no extra weight to the headset.

5. Zoom: Meta prototype Half Dome can achieve human eye zoom

In the natural environment, the human eye refocuses (zooms) automatically to see objects at different distances. In a VR headset, however, the distance between the eye and the screen never changes, so the eye cannot refocus between near and far objects. The result is the vergence-accommodation conflict: the eyes converge on a virtual object at one distance while focusing at the display’s fixed focal distance. As a consequence, users sometimes experience eye fatigue, even headaches and vomiting. To solve this problem, researchers at Meta have developed Half Dome, a series of varifocal prototypes that support “progressive vision”. Half Dome can simulate different focal planes and show different degrees of blur, helping the eye adjust its focus in the virtual world.
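The size of the vergence-accommodation conflict can be estimated with basic optics: focusing demand in diopters is the reciprocal of the distance in metres, and the vergence angle follows from the distance and the spacing between the eyes. In the sketch below, the 63 mm interpupillary distance and the 1.3 m fixed focal plane are illustrative assumptions, not figures from the talk.

```python
import math


def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when both eyes fixate a point at distance_m."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def accommodation_diopters(distance_m):
    """Focusing demand for an object at distance_m (diopters = 1 / metres)."""
    return 1.0 / distance_m


def va_conflict_diopters(object_distance_m, focal_plane_m):
    """Mismatch between where the eyes converge and where the display forces them to focus."""
    return abs(accommodation_diopters(object_distance_m)
               - accommodation_diopters(focal_plane_m))


focal_plane_m = 1.3   # hypothetical fixed focal distance of the headset optics
for d in (0.3, 1.3, 10.0):
    print(d, round(vergence_angle_deg(d), 2), round(va_conflict_diopters(d, focal_plane_m), 2))
```

The mismatch is zero when the virtual object sits at the focal plane and grows quickly for nearby objects. That is the situation a varifocal design like Half Dome aims to handle by moving the focal plane to match.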

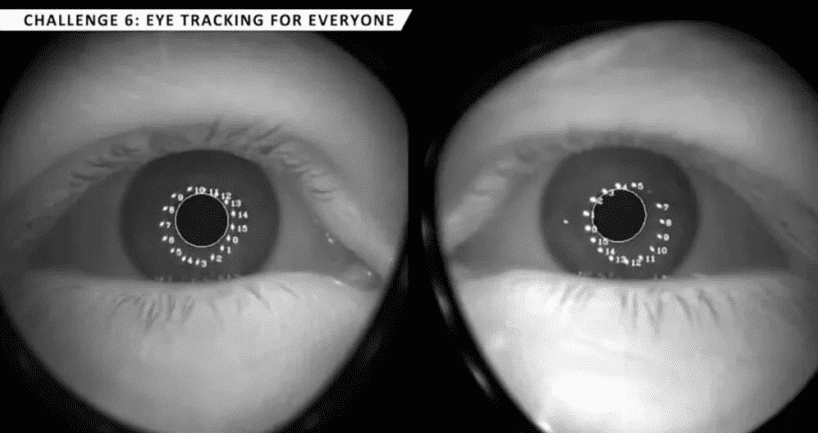

6. Eye tracking: it is difficult to adapt to everyone’s eye conditions

Eye tracking is a key technology in virtual reality and the basis for many other important VR technologies, such as zoom and distortion correction. It also lets users make eye contact with others in the virtual world for a more realistic social experience. The problem is that pupil shapes differ from person to person, as do eyelids and eyelashes, so current eye-tracking technology does not work well for all users. Lanman’s team therefore plans to collect much more user data to improve eye tracking and make it suitable for more people.

7. Distortion correction: Meta developed a distortion simulator to speed up algorithm iteration

Movement of the pupil can distort the image, which reduces the user’s sense of immersion, especially in combination with zoom technology. To develop a correction algorithm, researchers have to test each adjustment on a physical headset, but producing headsets can take weeks or even months, which significantly delays algorithm iteration. To solve this problem, the Meta research team developed a distortion simulator. With it, researchers can test correction algorithms without producing test headsets and special lenses.

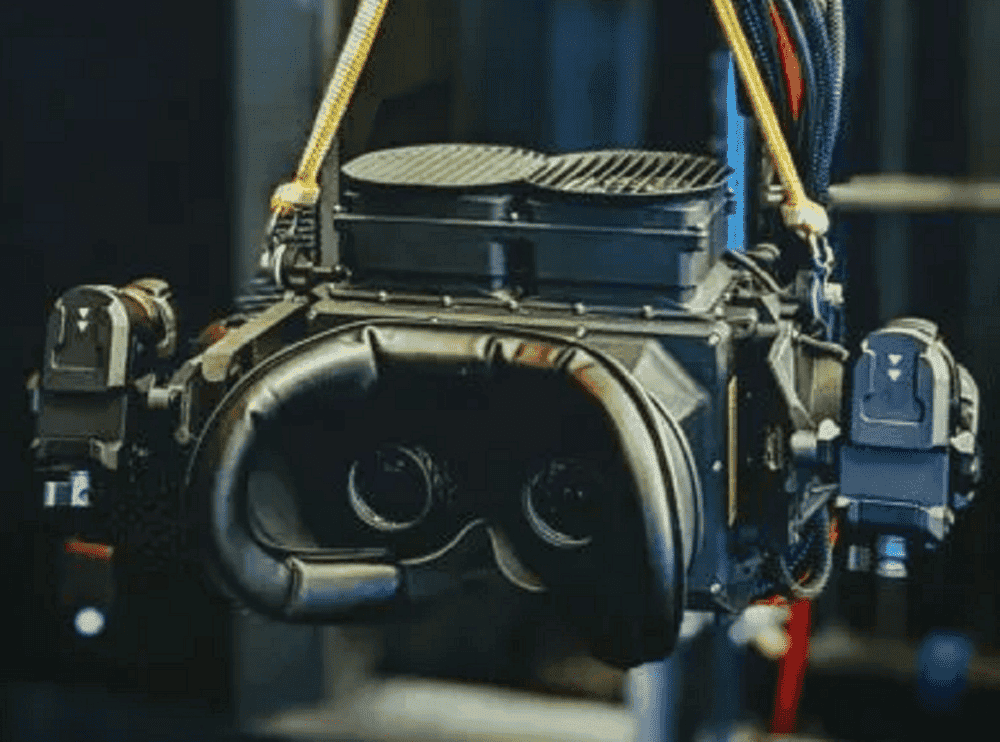

8. High Dynamic Range (HDR): The peak brightness of the Starburst prototype is 20,000 nits

Physical objects and environments are much brighter than anything a VR headset display can show. To address this, Meta created the Starburst prototype. Its peak brightness is 20,000 nits, 200 times that of the existing Quest 2. Starburst can more realistically simulate enclosed spaces and lighting conditions at night, making virtual environments look more realistic. For now, however, Starburst is heavy and consumes a lot of energy. Meta believes that HDR contributes more to visual realism than resolution and zoom, but it is also the technology furthest from practical use.
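The two brightness figures above imply a baseline for the Quest 2 and a gap that can also be expressed in photographic stops (doublings of brightness). The short calculation below uses only the numbers quoted in the article. The stops figure is a derived illustration, not something stated in the talk.

```python
import math

starburst_peak_nits = 20_000   # peak brightness quoted above
ratio_to_quest2 = 200          # "200 times that of the existing Quest 2"

implied_quest2_nits = starburst_peak_nits / ratio_to_quest2
extra_stops = math.log2(ratio_to_quest2)   # each stop is one doubling of brightness

print(implied_quest2_nits)     # 100.0
print(round(extra_stops, 2))   # 7.64
```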

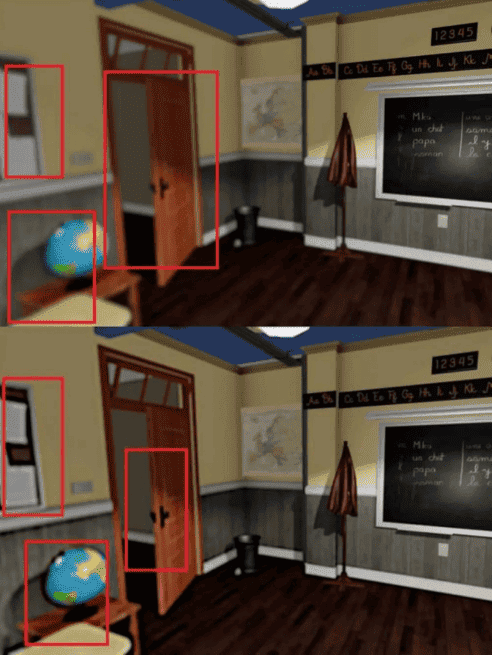

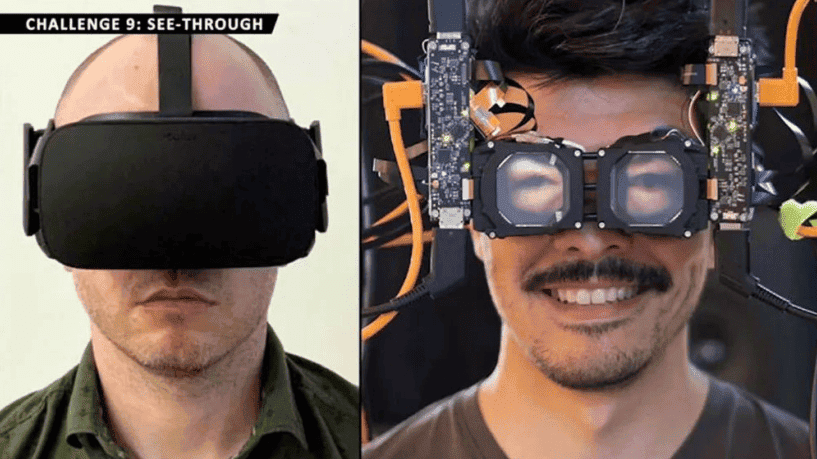

9. Visual realism: Passthrough technology is not yet mature

Better VR headsets should also let users stay engaged with the real world. A headset can capture its real-world surroundings with cameras and display them as video inside virtual reality. This technique, called “passthrough,” is already implemented in commercial VR headsets, but the quality is poor. For example, the Quest 2 offers a black-and-white passthrough mode, while Meta’s upcoming high-end VR headset “Quest Pro” aims for higher-resolution colour passthrough.

However, VR headsets have yet to achieve a perfect reconstruction of the physical environment. The viewpoint of the image captured by passthrough is spatially offset from the user’s eyes, and users may feel uncomfortable after long use. Meta is therefore working on AI-assisted view synthesis, which generates perspective-correct viewpoints in real time with high visual fidelity.

In addition, Meta is working on a “Reverse Passthrough” feature that would let people in the real world see the VR user’s eyes and face and make eye contact. At present, however, the result looks incongruous and unnatural, and it is far from ready for the market.

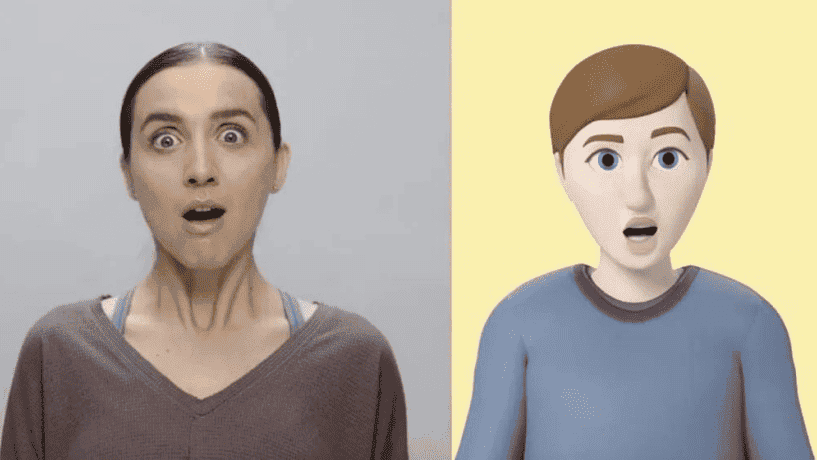

10. Facial reconstruction: Meta conducts research on the “virtual avatar” project

Meta hopes that in the future people will meet in virtual environments that feel as real as reality. To that end, Meta is working on a research project called Codec Avatars, which is dedicated to creating 3D digital avatars for people in virtual environments.

Currently, VR headsets can read the facial expressions of VR users in real time and transfer them into virtual reality. There are reports that the upcoming Quest Pro may be the first device from Meta to offer face tracking.