This article looks at the development of AI and the field of deep learning. Deep learning originated in the era of vacuum tube computers. In 1958, Frank Rosenblatt of Cornell University designed the first artificial neural network, the approach that was later named “deep learning”. Rosenblatt knew that this technology outstripped the computing power available at the time. He said: “With the increase of neural network connection nodes… traditional digital computers will soon be unable to bear the load of calculation”.

Fortunately, computer hardware has improved rapidly over the decades, making calculations about 10 million times faster. As a result, researchers in the 21st century are able to implement neural networks with many more connections, which can simulate more complex phenomena. Deep learning is now widely used in fields such as gaming, language translation, and medical image analysis.

The rise of deep learning has been strong, but its future is likely to be bumpy. The computational limitations that Rosenblatt worried about remain a cloud hanging over the field. Today, deep learning researchers are pushing the limits of their computational tools.

How Deep Learning Works

Deep learning is the result of long-term development in the field of artificial intelligence. Early AI systems were based on logic and rules given by human experts. Gradually, systems gained parameters that could be adjusted through learning. Today, neural networks can learn to build highly malleable computer models. The output of a neural network is no longer the result of a single formula but of extremely complex operations. A sufficiently large neural network model can fit any type of data.

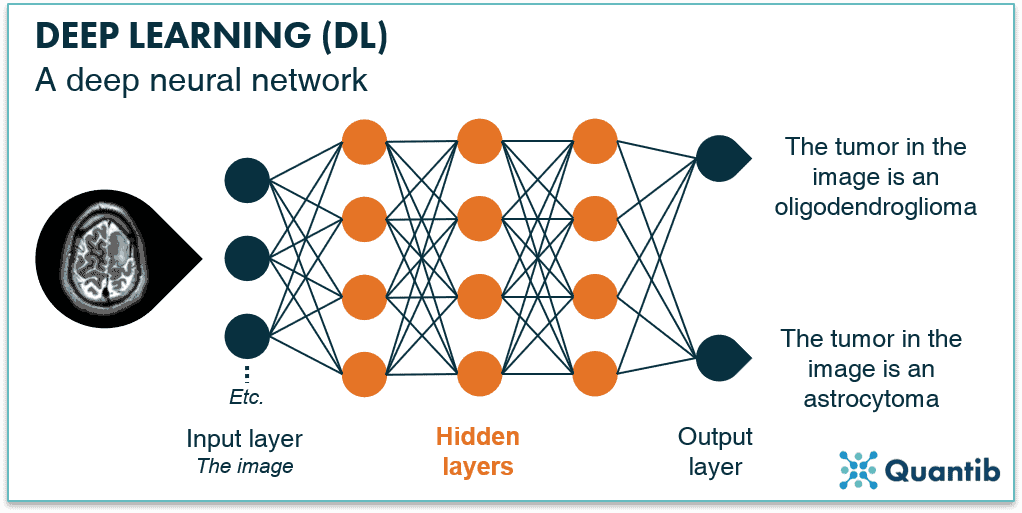

The difference between an “expert system approach” and a “flexible system approach” is best seen through an example. Consider a situation where an X-ray is used to determine whether a patient has cancer. The radiograph contains many components and features, but we do not know in advance which of them are important.

Expert systems approach the problem by relying on human experts, in this case experts in radiology and oncology. The experts specify the important variables, and the system examines only those variables. This method requires little computation, so it has been widely used. But if the experts fail to pinpoint the key variables, the system will fail.

Flexible systems solve the problem by examining as many variables as possible and letting the system decide for itself which ones are important. This requires more data and higher computational costs, so it is less efficient than the expert system approach. However, given enough data and computation, flexible systems can outperform expert systems.
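The contrast between the two approaches can be sketched in a few lines of Python. This is a toy illustration, not a diagnostic method: the records in `ROWS`, the four unnamed variables, and the helpers `class_mean_gap` and `rank_variables` are all hypothetical. Each variable is scored by how far apart its average values are in the two classes. The expert approach scores only the variables the experts listed, while the flexible approach scores every variable.

```python
from statistics import mean

# Hypothetical toy data: (measured variables, label), where label 1 = disease present.
# In this made-up data set only variable 2 actually separates the two classes.
ROWS = [
    ((0.2, 0.5, 0.9, 0.1), 1),
    ((0.3, 0.4, 0.8, 0.7), 1),
    ((0.2, 0.6, 0.7, 0.4), 1),
    ((0.3, 0.5, 0.2, 0.2), 0),
    ((0.2, 0.4, 0.1, 0.6), 0),
    ((0.3, 0.6, 0.3, 0.4), 0),
]


def class_mean_gap(rows, j):
    """Score variable j by the gap between its mean value in each class."""
    positives = [x[j] for x, label in rows if label == 1]
    negatives = [x[j] for x, label in rows if label == 0]
    return abs(mean(positives) - mean(negatives))


def rank_variables(rows, candidates):
    """Return the candidate variables, most informative first."""
    return sorted(candidates, key=lambda j: class_mean_gap(rows, j), reverse=True)


# Expert approach: examine only the variables the experts named (assumed to be 0 and 1).
expert_ranking = rank_variables(ROWS, [0, 1])

# Flexible approach: examine every variable and let the data decide.
flexible_ranking = rank_variables(ROWS, range(4))

print("expert examines", len(expert_ranking), "variables; best:", expert_ranking[0])
print("flexible examines", len(flexible_ranking), "variables; best:", flexible_ranking[0])
```

In this made-up case the experts' list omits variable 2, so the expert approach cannot find it no matter how cheap it is to run. The flexible approach finds it, at the cost of scoring twice as many variables.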

Deep learning models have a massive number of parameters

Deep learning models are “overparameterized”. This means that there are more parameters than data points available for training. For example, a neural network for image recognition may have 480 million parameters but be trained on only 1.2 million images. Such a large number of parameters often leads to “overfitting”: the model fits the training data set too well, so it captures the specifics of that data but misses the general trend.
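Overfitting can be illustrated with a model far smaller than a neural network. The sketch below is a stand-in under stated assumptions, not a deep learning system. It fits eight made-up noisy points from the line y = 2x in two ways: with a polynomial of degree 7, which has as many parameters as there are data points, and with a two-parameter straight line. The data, the alternating noise pattern, and all function names are hypothetical.

```python
def lagrange_predict(xs, ys, x):
    """Evaluate the unique degree-(n-1) polynomial through all n points at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def fit_line(xs, ys):
    """Ordinary least-squares straight line; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x


def mse(predictions, targets):
    """Mean squared error between two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


# Hypothetical training data: the trend y = 2x plus alternating noise of +/-0.5.
train_x = [float(i) for i in range(8)]
train_y = [2 * x + (0.5 if i % 2 == 0 else -0.5) for i, x in enumerate(train_x)]

# Held-out points halfway between the training points, following the true trend.
test_x = [x + 0.5 for x in train_x[:-1]]
test_y = [2 * x for x in test_x]

slope, intercept = fit_line(train_x, train_y)

poly_train_mse = mse([lagrange_predict(train_x, train_y, x) for x in train_x], train_y)
poly_test_mse = mse([lagrange_predict(train_x, train_y, x) for x in test_x], test_y)
line_test_mse = mse([slope * x + intercept for x in test_x], test_y)

print("degree-7 polynomial: train MSE", poly_train_mse, "test MSE", poly_test_mse)
print("straight line: test MSE", line_test_mse)
```

The polynomial reproduces every training point, noise included, yet it does much worse than the straight line on the held-out points. It has learned the specifics and missed the trend.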

Deep learning has already shown its strengths in the field of machine translation. In the early days, translation software translated according to rules developed by grammar experts. In translating languages such as Urdu, Arabic, and Malay, rule-based methods initially outperformed statistics-based deep learning methods. But as the amount of text data has increased, deep learning has come to outperform other methods across the board. It turns out that deep learning is superior in almost all application domains.

Huge computational cost

A rule that applies to all statistical models is that to improve performance by a factor of K, you need at least K² times as much data to train the model. Deep learning models are also overparameterized, which raises the requirement further: improving performance by a factor of K takes at least K⁴ times as much computation. In simple terms, to improve the performance of deep learning models, scientists must build and train larger models. But how expensive will it be to train these larger models? Will the cost be too high for us to afford, and thus hold back the field?
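The scaling rule can be turned into a short calculation. The sketch below assumes the rule is read as a power law: a K-fold performance improvement needs at least K squared times as much data and, for overparameterized models, at least K to the fourth power times as much computation. The function name `theoretical_requirements` is hypothetical.

```python
def theoretical_requirements(k):
    """Lower bounds on extra data and computation for a k-fold improvement.

    Assumes the power-law reading of the rule: data grows as k**2 and
    computation grows as k**4.
    """
    return {"data": k ** 2, "computation": k ** 4}


for k in (2, 3, 10):
    needs = theoretical_requirements(k)
    print(f"{k}x better: {needs['data']}x data, {needs['computation']}x computation")
```

Under this reading, doubling performance (K = 2) needs at least 4 times the data and 16 times the computation, and a 10-fold improvement needs at least 10,000 times the computation.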

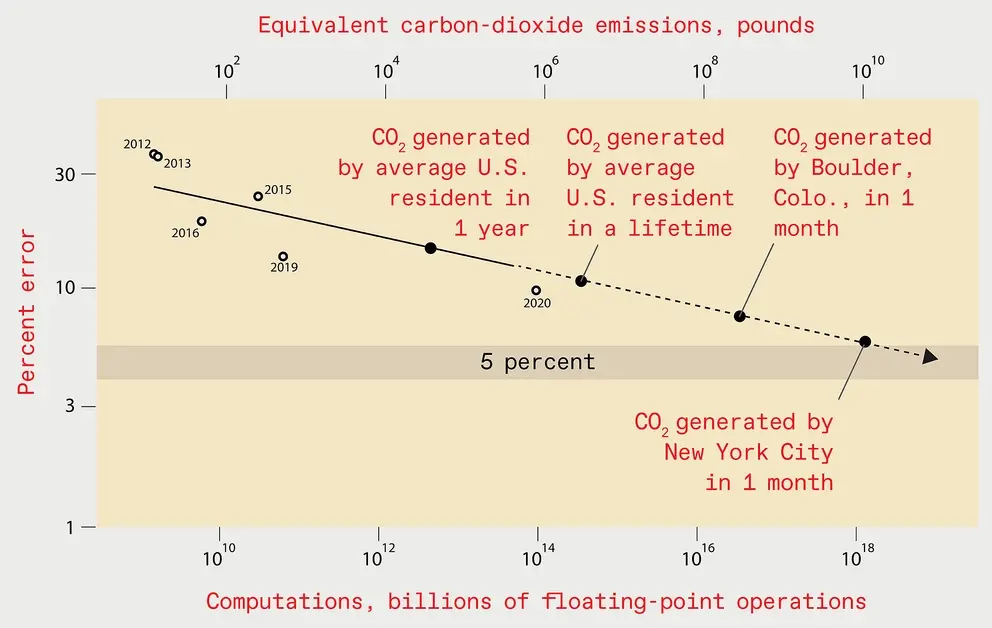

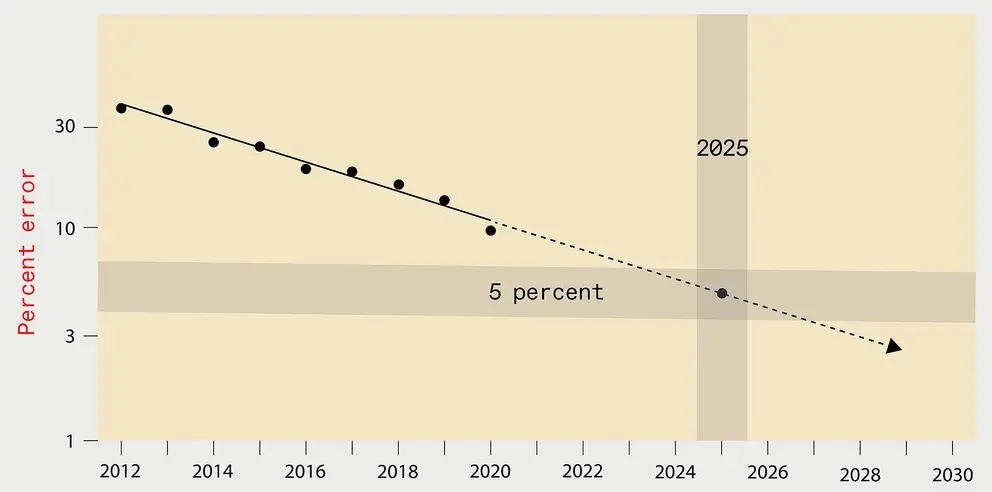

To explore this question, scientists at the Massachusetts Institute of Technology collected data from more than 1,000 deep learning research papers. Their research warns that deep learning faces serious challenges.

Take image classification as an example. Reducing image classification errors comes with a huge computational burden. The ability to train a deep learning system on graphics processing units (GPUs) was first demonstrated in 2012 with the AlexNet model, which took 5 to 6 days to train on two GPUs. By 2018, another model, NASNet-A, had cut AlexNet’s error rate in half, but it used more than 1,000 times as much computation to do so.

Has the improvement in chip performance kept up with the development of deep learning? Not at all. Of the more than 1,000-fold increase in computation used by NASNet-A, only a 6-fold improvement came from better hardware. The rest was achieved by using more processors or running them longer, at higher cost.
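The split between hardware gains and everything else follows from a single division. The sketch below takes the two figures quoted above (a 1,000-fold increase in computation and a 6-fold hardware improvement) and assumes the two factors multiply. The function name `non_hardware_factor` is hypothetical.

```python
def non_hardware_factor(total_increase, hardware_gain):
    """Factor that must come from more processors or longer runs,
    assuming total_increase = hardware_gain * non_hardware_factor."""
    return total_increase / hardware_gain


remaining = non_hardware_factor(1000, 6)
print(f"about {remaining:.0f}x")  # about 167x
```

Under that assumption, a factor of roughly 167 had to be paid for with more processors or longer training runs.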

Practical requirements far exceed the theoretical ones

In theory, improving performance by a factor of K requires at least K⁴ times as much computation. In practice, however, the computation grows by a factor of at least K⁹. This means that more than 500 times as many computing resources are required to halve the error rate. This is very expensive. Training an image recognition model with an error rate of less than 5% would cost $100 billion, and the electricity it consumes would generate carbon emissions equivalent to a month’s worth of emissions from New York City. Training an image recognition model with an error rate of less than 1% would cost even more.
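The figure of more than 500 can be checked directly, assuming the practical requirement is read as computation growing with the ninth power of the improvement factor. The sketch also inverts the relationship to show how little improvement a given increase in computation buys. Both function names are hypothetical.

```python
def practical_compute_factor(k, exponent=9):
    """Computation multiplier needed for a k-fold improvement."""
    return k ** exponent


def improvement_from_compute(compute_factor, exponent=9):
    """Improvement factor bought by multiplying computation by compute_factor."""
    return compute_factor ** (1 / exponent)


print(practical_compute_factor(2))               # 512
print(round(improvement_from_compute(1000), 2))  # 2.15
```

Halving the error rate (K = 2) therefore takes about 512 times the computation. Read the other way, 1,000 times the computation buys only a little more than a 2-fold improvement, which is in line with the NASNet-A example above.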

By 2025, the error rate of the best image recognition systems is projected to fall to 5%. However, training such a deep learning system would generate carbon dioxide emissions equivalent to a month’s worth of emissions from New York City.

The burden of computational cost has become evident at the cutting edge of deep learning. OpenAI, a machine learning think tank, spent more than $4 million to design and train one of its models. Companies are also starting to shy away from the computational cost of deep learning. A large supermarket chain in Europe recently abandoned a deep learning system that was meant to predict which products would be purchased. The company’s executives concluded that the cost of training and running the system was too high.