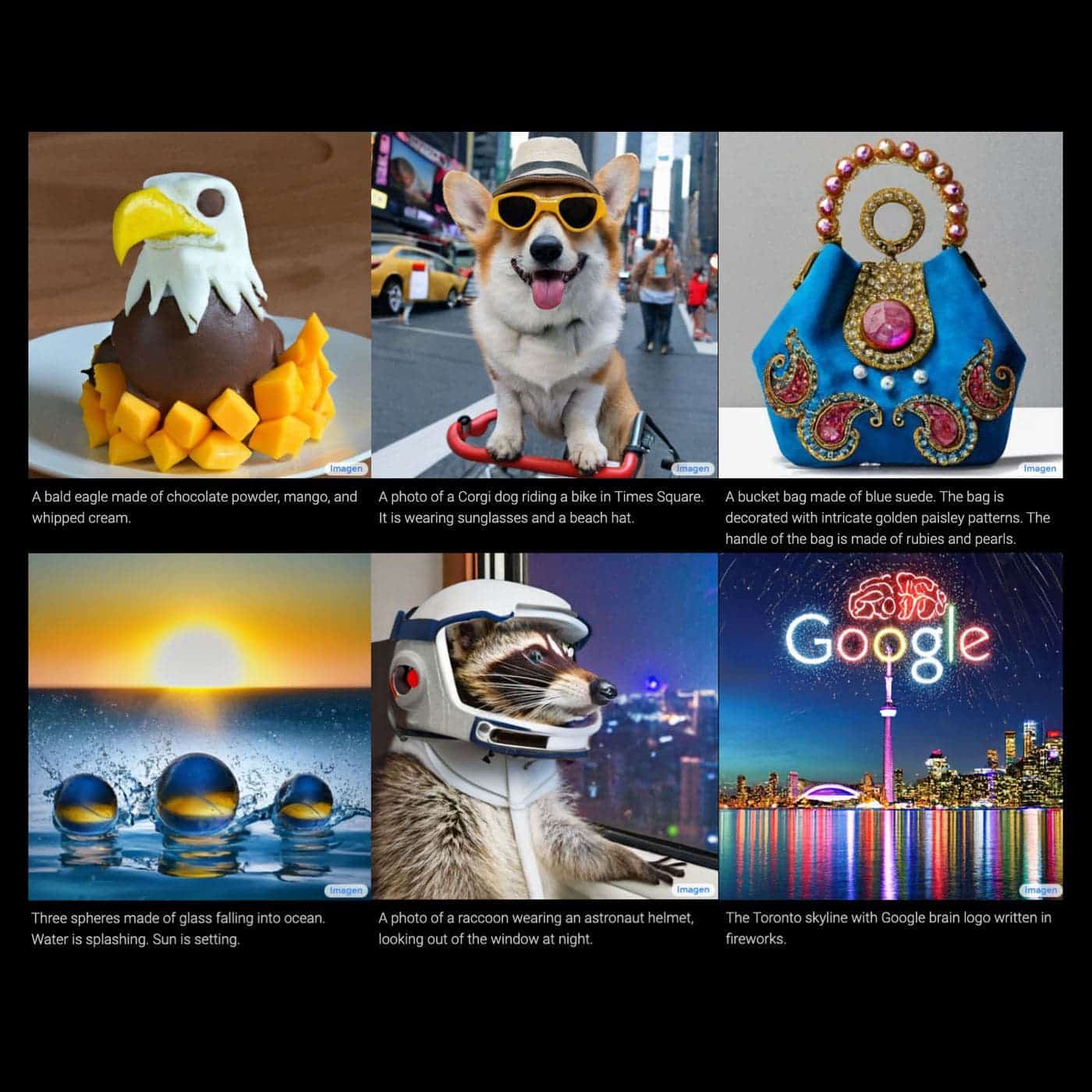

The metaverse will run on rules different from those of the universe we live in. In practice, anyone will be able to create whatever comes to mind, so in some sense there will be no rules at all. But since this is a new world in which computers and humans will live and work side by side, it is reasonable to ask how that collaboration will take place. One form it takes is text-to-image generation, which is very popular right now. For instance, OpenAI offers DALL-E, while Google has Imagen. Yesterday, Meta, one of the leading players in the field, announced its own AI image generation engine. According to the company, the new engine will help people create more immersive art in the metaverse.

Meta Has Its Own Text-to-Image Generation Engine

On paper, text-to-image generation is simple. For instance, when you enter a phrase such as “there’s a horse in the hospital,” the engine first passes it through a transformer model, a type of neural network, which “understands” what you said by developing a contextual understanding of how the words relate to one another. Once the engine has worked out what you meant, it creates an image using a set of GANs (generative adversarial networks).

Thanks to advances in machine learning and its capacity for self-training, text-to-image generation engines can create any nonsense you want. All of these engines work on the same basic principle. However, they differ in how they handle the AI processing.

For instance, Google’s Imagen uses a diffusion model, “which learns to convert a pattern of random dots to images. These images first start as low resolution and then progressively increase in resolution.” Google’s Parti AI, on the other hand, “first converts a collection of images into a sequence of code entries, similar to puzzle pieces. A given text prompt is then translated into these code entries and a new image is created.”
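To make the “code entries” idea in the Parti description a little more concrete, here is a minimal toy sketch in Python. It is not Google’s implementation: the four-entry `CODEBOOK`, the two-number “patches,” and the `encode` and `decode` helpers are all hypothetical stand-ins, assuming only that each image piece is replaced by the index of its closest entry in a fixed set of “puzzle pieces.”

```python
from math import dist

# Hypothetical toy codebook: each entry stands in for one learned "puzzle piece".
# A real system would learn thousands of high-dimensional entries from images.
CODEBOOK = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]


def encode(patches):
    """Replace each patch with the index of its nearest codebook entry."""
    return [
        min(range(len(CODEBOOK)), key=lambda i: dist(patch, CODEBOOK[i]))
        for patch in patches
    ]


def decode(indices):
    """Turn a sequence of code indices back into their codebook entries."""
    return [CODEBOOK[i] for i in indices]


# Three made-up image patches, each reduced to two numbers for illustration.
patches = [(0.1, 0.9), (0.8, 0.2), (0.95, 1.1)]
tokens = encode(patches)          # the "sequence of code entries"
reconstruction = decode(tokens)   # an approximation rebuilt from the codes
```

In this sketch an image becomes a short list of integers, which is the kind of sequence a text-to-token model could then be trained to produce from a prompt.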

However, as a user, you have no control over specific aspects of the output image. “To realize AI’s potential to push creative expression forward,” Meta CEO Mark Zuckerberg stated in Tuesday’s blog post, “people should be able to shape and control the content a system generates.”