Samsung Launches TRUEBench to Measure AI in Real-World Work

Samsung | Saturday, 27 September 2025 at 03:06

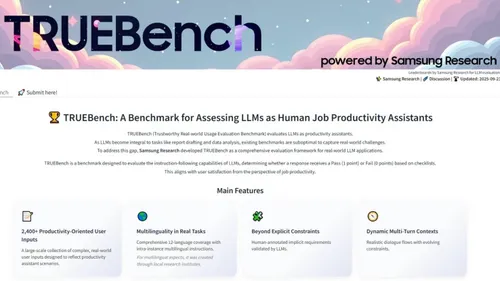

Samsung has introduced TRUEBench, a new benchmark designed to show how AI performs in real workplace settings. Instead of focusing on narrow academic tasks, TRUEBench runs AI systems through the everyday jobs people actually use them for.

The benchmark covers 2,485 scenarios across ten categories, 46 subcategories, and twelve languages. Tasks range from quick prompts to processing long documents of more than 20,000 characters.


Designed for Real Work

Unlike many AI benchmarks that test simple question-and-answer performance in English, TRUEBench looks at practical office tasks. It includes translation, document summarisation, data analysis, and multi-step instructions where the AI must remember context.

The idea is to capture the variety of activities users depend on in daily work, from short commands to full-length business reports.

Strict Scoring Rules

Passing a TRUEBench test isn’t easy. For each task, the model must satisfy every condition, including unspoken expectations a reasonable user would have.

If any part is missing, the result counts as a failure. This “all-or-nothing” scoring makes the benchmark tougher but also closer to how people judge whether an AI response is useful.

Samsung built the rules through a mix of human and AI input. Annotators wrote the conditions, AI flagged conflicts, and humans refined them before finalising the framework. Once set, the tests could be run at scale through automated AI scoring.

Transparency for Developers

To build trust, Samsung has published the dataset, leaderboards, and output statistics on Hugging Face. Users can compare up to five models side by side. With the data made public, researchers and developers can assess the benchmark for themselves.

Strengths and Limits

TRUEBench is an ambitious new AI benchmark, but it is not free of weak points. Its strict scoring can mark a reply as wrong even when it would have helped the user. Support for twelve languages is a step forward, but in languages with less training data the results may be less stable and harder to rely on.

It also leans toward business tasks. Fields such as law, healthcare, or advanced scientific research may not be fully represented. So while TRUEBench is a fresh step, it shows both the promise and the gaps of new approaches to measuring AI.

Samsung’s Outlook

Paul (Kyungwhoon) Cheun, CTO of Samsung’s DX group and head of Samsung Research, said the company sees TRUEBench as a new foundation for AI evaluation. He added that the tool will raise the bar for how AI capability is measured and will help strengthen Samsung’s position in the field. By relying on transparent methods and real use cases, Samsung wants TRUEBench to be a tough but fair way to track what AI can actually do today.
